The benefits of AI are numerous, and as more industries have started using it, the calls for more responsible use of AI have also increased. AI ethics is a topic that has been hotly debated in recent years. There are many different opinions on how to handle the ethical dilemmas that arise with the use of AI.

AI ethics aims to create safe, fair and ethical AI systems and to reduce the harm that unchecked AI development can cause. Data scientists, delivery managers and data engineers are responsible for applying AI ethics at every stage of AI development.

Two Major AI Ethics Concerns Addressed:

1) Bias and Discrimination:

AI systems make predictions, classifications and decisions that affect people’s lives. AI has the potential to be a great tool for eliminating bias and discrimination, but it can also cause them.

The goal of AI is to make decisions that are as objective as possible and free of bias. However, this is not always possible: bias can be introduced into an AI system through either the data or the programmers themselves. AI bias is a problem because machines cannot grasp the nuances of human language and behaviour, so they reproduce the patterns in their data without recognising when those patterns are unfair.

AI ethics therefore proposes that bias and discrimination be removed during development so that the resulting system is unbiased. If bias creeps into an AI system’s decisions, it will negatively impact society.
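One simple way to check whether bias has crept into a system’s decisions is to compare how often each group receives a positive outcome. The sketch below is only an illustration, not a complete fairness audit: the function names, the toy data and the choice of metric (the gap in selection rates between groups, often called demographic parity difference) are assumptions made for this example.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = approved, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates)  # group "a" is approved 75% of the time, group "b" 25%
print(gap)    # 0.5
```

A gap of zero means every group is selected at the same rate. A large gap does not prove discrimination on its own, but it is a signal that the data or the model deserves a closer look.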

2) Invasion of Privacy:

Many AI systems work by collecting data and using it to improve. This data must be collected ethically: individuals must give their consent before their profiles or data can be used in an AI system.
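In practice, the consent requirement can be enforced at the point where data enters a training pipeline. The following is a minimal sketch under stated assumptions: the `Record` class, its `consent_given` field and the `consented_only` helper are hypothetical names invented for this example, and a real system would also need to handle withdrawal of consent and the purposes the consent covers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical user record; the field names are illustrative only."""
    user_id: str
    features: tuple
    consent_given: bool


def consented_only(records):
    """Keep only the records whose owners have given consent."""
    return [r for r in records if r.consent_given]


# Hypothetical toy data.
records = [
    Record("u1", (0.2, 1.0), True),
    Record("u2", (0.9, 0.0), False),
    Record("u3", (0.5, 0.5), True),
]

training_set = consented_only(records)
print([r.user_id for r in training_set])  # ['u1', 'u3']
```

Filtering before any processing takes place means that data without consent never reaches the model at all.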

Invasion of privacy is a major AI ethics concern for the future of society. AI systems are getting smarter and more capable, which means they can be used for a variety of purposes. One of these purposes is to invade people’s privacy and collect personal data to use in unethical ways.

The technology is developing so quickly that it is very hard to regulate the development and use of AI systems. Current laws may not be enough to protect our privacy from intelligent machines that can be programmed to invade it.

Three Ethical Principles That Can Be Used to Guide AI Development:

1) The principle of beneficence, which is about doing good and avoiding doing harm.

2) The principle of autonomy, which is about respecting human dignity and freedom by not treating people as mere means to an end.

3) The principle of justice, which is about fairness and equality in the distribution of goods and opportunities.

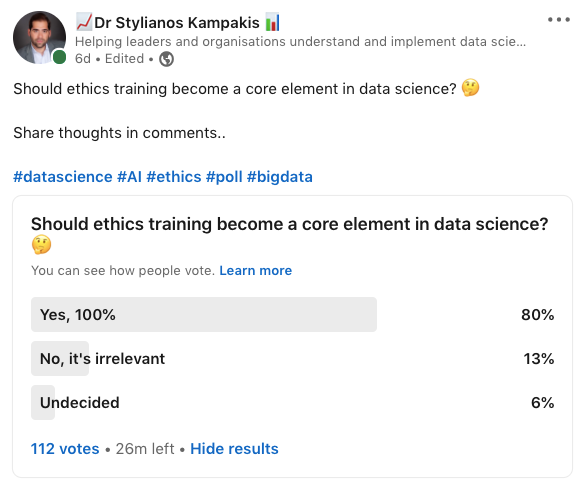

Should ethics training become a core element in data science?

Data science has the potential to make an impact on society, with its ability to influence policy decisions, healthcare, marketing and more. Data scientists are in charge of collecting data, analyzing the data, and interpreting the results.

The problem is that they may not have any training in ethics or how to handle sensitive data. This can lead to mistakes being made when handling sensitive information or even hacking into other people’s computers. This is why it is important for data scientists to learn ethics and how to use their skills in a responsible way so they know how their work can affect people’s lives.

Ethics training could help prevent mistakes from being made by providing guidelines on how to deal with sensitive information.

In a recent LinkedIn poll, I asked this key question: “Should ethics training become a core element in data science?” The results can be seen below:

Free Webinar: Interpretable AI, algorithmic accountability, and AI ethics:

It’s clear that as AI is playing a larger and larger role in our lives, we face situations where we need to explain how algorithms are making certain decisions. This is especially important in domains like law, medicine and autonomous vehicles.

In this webinar by The Tesseract Academy, we discuss this very important topic and answer some vital questions, such as:

- What is interpretable machine learning?

- How can we make algorithms accountable for their decisions?

- How can we better explain how AI works in critical situations?

We explain why we need explainable AI, some techniques that can be used for explainable AI and what decision makers can do in order to make sure their algorithms remain transparent.

You can access the webinar here.

The AI ethics framework:

AI is becoming such a huge part of our lives, and as it does, the ethical implications of the technology become increasingly important. The AI ethics framework by the Tesseract Academy provides a way to guide AI development ethically and responsibly.

You can access it here.

Videos:

If you have an interest in AI Ethics then check out my interview below with Daniel Bashir where we dived into this topic further and discussed his upcoming book: Towards Machine Learning Literacy.

We also held an AI Ethics and Bias in AI panel event last year, where an exceptional group of guest speakers debated this controversial topic.

More specifically, we talked about topics like:

- The AI Ethics frameworks by the ICO

- Under what circumstances AI can be biased

- How to fight bias in AI

You can watch it below: