Advances in learning theory

Vladimir Vapnik recently gave a talk about a new theory of learning he is working on. The theory involves the concept of a “teacher” that provides the learning algorithm with privileged examples that can speed up learning. The inspiration came from the observation that humans require far fewer examples to learn than current machine learning algorithms. His theory is that this phenomenon is partly due to the fact that the teacher controls the examples that are given to the student, and may also supply the student with privileged information. Even though this information might not be available at test time, it can be used to speed up training.

Interestingly, Joshua Tenenbaum has dealt with the same observation that humans learn much faster. His approach, called Bayesian Program Learning, was already discussed in a previous post.

The talk can be found here

Who is Vladimir Vapnik?

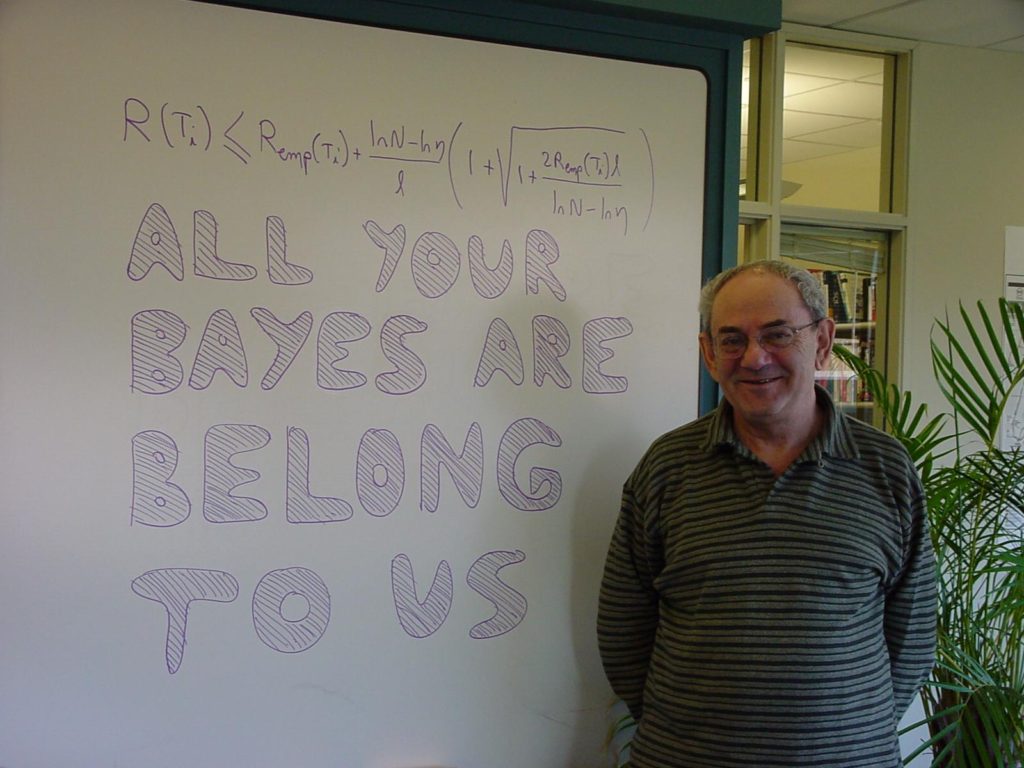

Vladimir Vapnik (born in 1936) is best known as the co-inventor of one of the most popular algorithms in machine learning: the support vector machine.

He gained his Master's degree in Mathematics in 1958 at Uzbek State University, Samarkand, USSR. He then worked at the Institute of Control Sciences, Moscow, from 1961 to 1990, where he became Head of the Computer Science Research Department. He then joined AT&T Bell Laboratories in New Jersey, and in 1995 he was appointed Professor of Computer Science and Statistics at Royal Holloway.

The theory of support vector machines was strongly influenced by his other great contribution to machine learning, the Vapnik-Chervonenkis theory (also known as VC theory). This was the first complete theory of computational learning, and it provided theoretical bounds and guarantees for the generalisation of machine learning models.
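To give a feeling for what such a bound looks like, here is a minimal sketch of one commonly quoted form of the VC bound for binary classification. With probability at least 1 - eta, the true risk is at most the empirical risk plus a confidence term that depends only on the VC dimension h and the number of training examples n. The function and parameter names below are my own choices for illustration, and other versions of the bound use slightly different constants.

```python
import math


def vc_confidence(h: int, n: int, eta: float = 0.05) -> float:
    """Confidence term of a commonly quoted VC bound.

    sqrt((h * (ln(2n / h) + 1) - ln(eta / 4)) / n)

    h   : VC dimension of the hypothesis class (assumed known)
    n   : number of training examples (this form assumes h <= n)
    eta : the bound holds with probability at least 1 - eta
    """
    if not 0 < h <= n:
        raise ValueError("this form of the bound assumes 0 < h <= n")
    if not 0 < eta < 1:
        raise ValueError("eta must lie strictly between 0 and 1")
    return math.sqrt((h * (math.log(2 * n / h) + 1) - math.log(eta / 4)) / n)


def vc_risk_bound(empirical_risk: float, h: int, n: int, eta: float = 0.05) -> float:
    """Upper bound on the true risk: empirical risk plus the confidence term."""
    return empirical_risk + vc_confidence(h, n, eta)


if __name__ == "__main__":
    # Illustrative numbers only: the bound tightens as the sample grows.
    for n in (1_000, 10_000, 100_000):
        print(n, vc_risk_bound(empirical_risk=0.05, h=50, n=n))
```

Note that nothing in the calculation refers to the distribution of the data. The confidence term shrinks as n grows and increases with h, which is the trade-off between sample size and model capacity that the theory formalises.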

The VC theory of learning is also particularly important because it provided an alternative to the Bayesian theory of machine learning, which had dominated the machine learning community in the 90s. The reason is that VC learning theory does not require the definition of any prior distributions, and thus provides a more general way to approach the problem of learning.

The text on the board in the photo above is a reference to a classic computer game joke.

If you are interested in reading more about the views of great people in data science, I would suggest you also read the article about Marvin Minsky.