Clustering

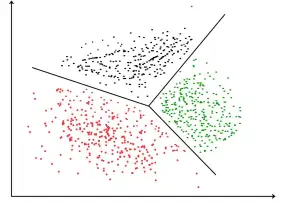

Clustering is one of the most popular applications of machine learning, and arguably the most common unsupervised learning technique.

When clustering, we usually rely on a distance metric. A distance metric is a way to define how close things are to each other. The most popular distance metric, by far, is the Euclidean distance, which for two points x and y with n features is defined as:

$latex d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$
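
As a minimal sketch, the Euclidean distance between two points given as lists of numbers can be written in plain Python as follows. The function name `euclidean_distance` is just an illustrative choice, not something taken from a library.

```python
import math


def euclidean_distance(x, y):
    """Square root of the summed squared differences between two points."""
    if len(x) != len(y):
        raise ValueError("points must have the same number of dimensions")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


# Classic 3-4-5 right triangle.
print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
```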

However, sometimes this metric is not a very good choice. This can happen, for example, when the dataset contains a large number of categorical variables.

Why are categorical variables a problem? Let’s find out.

Categorical variables

Most algorithms can’t deal with categorical variables directly, so we use a process called dummification (also known as one-hot encoding) to turn them into numerical ones. This process converts each category into its own binary (0/1) variable.

The end result is that we end up with a dataset that has far higher dimensionality than the one we started with. If you have 2 categorical variables, with 10 categories each, then you end up with 20 new variables!
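
To make the growth in columns concrete, here is a small hand-rolled sketch of dummification in plain Python. The helper `one_hot_encode` and the toy rows are hypothetical and only meant for illustration. In practice a library routine such as pandas `get_dummies` is the usual tool for this.

```python
def one_hot_encode(rows, categorical_columns):
    """Replace each categorical column with one 0/1 column per category."""
    # Collect the sorted set of categories seen in each categorical column.
    categories = {
        col: sorted({row[col] for row in rows}) for col in categorical_columns
    }
    encoded = []
    for row in rows:
        # Keep the non-categorical columns unchanged.
        new_row = {k: v for k, v in row.items() if k not in categorical_columns}
        # Add one binary column per (column, category) pair.
        for col in categorical_columns:
            for cat in categories[col]:
                new_row[f"{col}_{cat}"] = 1 if row[col] == cat else 0
        encoded.append(new_row)
    return encoded


# Toy data: 1 numerical column and 2 categorical columns.
rows = [
    {"age": 31, "colour": "red", "city": "Paris"},
    {"age": 45, "colour": "blue", "city": "Rome"},
    {"age": 27, "colour": "green", "city": "Paris"},
]
encoded = one_hot_encode(rows, ["colour", "city"])
print(len(rows[0]), "columns before,", len(encoded[0]), "columns after")
```

Here 3 colours and 2 cities turn 2 categorical columns into 5 dummy columns, so the toy dataset goes from 3 columns to 6.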

The problem in this case is something called the Curse of Dimensionality. In simple words, as the dimensionality of a dataset increases, traditional distance metrics stop behaving in an intuitive way. Say we have 5 numerical variables and 2 categorical variables, and the categorical ones turn into 20 new dummy variables.

The dummies suddenly represent 80% of the total columns (20 out of 25), which can distort the similarity between data points.
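
The following sketch illustrates one way this distortion can show up. It assumes, purely for illustration, that the 5 numerical features have been scaled to the range 0 to 1. The helpers `one_hot` and `build_point` are hypothetical names introduced here.

```python
import math

n_numeric = 5
n_dummy = 20  # 2 categorical variables x 10 categories each
dummy_share = n_dummy / (n_numeric + n_dummy)
print(dummy_share)  # 0.8


def one_hot(index, size):
    """Binary vector of length `size` with a single 1 at position `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def build_point(numeric, cat1, cat2, n_categories=10):
    """Concatenate numerical features with the dummies of two categoricals."""
    return numeric + one_hot(cat1, n_categories) + one_hot(cat2, n_categories)


a = build_point([0.5] * 5, 0, 0)
b = build_point([0.5] * 5, 1, 1)  # same numbers as a, different categories
c = build_point([1.0] * 5, 0, 0)  # same categories as a, every number shifted

print(math.dist(a, b))  # distance caused only by the dummy columns
print(math.dist(a, c))  # distance caused only by the numerical columns
```

Under these assumptions, a mismatch in just two categories pushes the points further apart than shifting all five numerical features by half of their range, because each mismatched category flips two dummy columns at once.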

Hence, when we have a large number of categorical variables and categories, clustering can become tricky.

So, what is the solution?

Clustering for categorical variables

When clustering datasets with lots of categorical variables, there are a few things we can do. The first is to process numerical and categorical variables separately: similarity is calculated once for the numerical features and once for the categorical features, and the two similarities are then averaged together.

So, if you have, let’s say, 2 numerical features and 3 categorical ones, you can calculate similarity1 for the numerical features and similarity2 for the categorical ones, and then combine them as an average weighted by the number of features of each type:

$latex similarity=\frac{2 \cdot similarity_1+3 \cdot similarity_2}{5}$
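
One possible sketch of this combination in plain Python is shown below. Several choices here are assumptions rather than part of the recipe above: the numerical similarity is taken as 1 / (1 + Euclidean distance), the categorical similarity is the fraction of matching categories, and the weighted sum is divided by the total number of features so that the result stays between 0 and 1. All function names are hypothetical.

```python
import math


def numeric_similarity(x, y):
    """Similarity in (0, 1] derived from the Euclidean distance d: 1 / (1 + d)."""
    d = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))
    return 1.0 / (1.0 + d)


def categorical_similarity(x, y):
    """Fraction of categorical features on which the two points agree."""
    return sum(a == b for a, b in zip(x, y)) / len(x)


def combined_similarity(num_x, num_y, cat_x, cat_y):
    """Average of the two similarities, weighted by the feature counts."""
    n_num, n_cat = len(num_x), len(cat_x)
    s1 = numeric_similarity(num_x, num_y)
    s2 = categorical_similarity(cat_x, cat_y)
    return (n_num * s1 + n_cat * s2) / (n_num + n_cat)


# 2 numerical features (identical) and 3 categorical ones (2 of 3 match).
score = combined_similarity(
    [0.2, 0.4], [0.2, 0.4],
    ["red", "Paris", "yes"], ["red", "Rome", "yes"],
)
print(score)  # (2 * 1 + 3 * 2/3) / 5, i.e. about 0.8
```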

Another option is to use a distance metric other than the Euclidean distance. If you go to scikit-learn’s distance metrics page, you can find many different distance metrics, some of which are suited to categorical variables.

The main idea behind these metrics is that they use concepts from set theory instead of blindly calculating a geometric distance. In one way or another, they all measure how many categories two data points have in common, which is a more intuitive, common-sense way of approaching similarity between categorical variables.
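
Two well-known examples of this idea are the Hamming (simple matching) distance and the Jaccard distance. The sketch below implements both by hand in plain Python, treating each data point as a list of category labels. The function names are illustrative, and treating a point as a set of (feature position, category) pairs for the Jaccard distance is an assumption made here. Library versions of these metrics also exist, for example `hamming` and `jaccard` in `scipy.spatial.distance`.

```python
def simple_matching_distance(x, y):
    """Fraction of features whose categories differ (Hamming distance)."""
    return sum(a != b for a, b in zip(x, y)) / len(x)


def jaccard_distance(x, y):
    """1 - |intersection| / |union| over sets of (position, category) pairs."""
    set_x = set(enumerate(x))
    set_y = set(enumerate(y))
    union = set_x | set_y
    if not union:
        return 0.0  # two empty points are treated as identical
    return 1.0 - len(set_x & set_y) / len(union)


p = ["red", "Paris", "yes", "small"]
q = ["red", "Rome", "yes", "large"]

print(simple_matching_distance(p, q))  # 2 of 4 features differ: 0.5
print(jaccard_distance(p, q))          # 2 shared pairs out of 6 distinct pairs
```

Both functions count shared categories rather than measuring a geometric gap, so no dummy columns are needed at all.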

Conclusion

You now know what to do the next time you are up against a clustering problem on a dataset with many categorical features.

If you have any questions about machine learning and data science, feel free to reach out to me, and also make sure to check out some of my courses and events, which I am organising on a regular basis.