Artificial intelligence (AI) has swiftly become integral to our online experiences, shaping everything from search engine results to social media feeds and personalized recommendations. As AI’s presence grows, so does the urgency to address the ethical implications surrounding its development and deployment. This article delves into the critical importance of ethical considerations in AI, exploring its potential benefits and risks.

AI Potential Biases and Risks

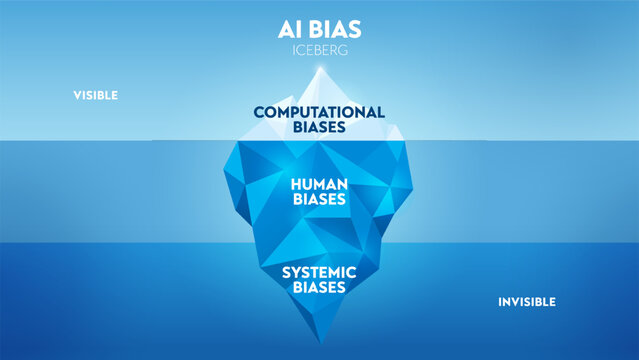

Imagine an AI system that makes important decisions about your life, but it turns out to be biased against you. This isn’t science fiction. AI bias is a real problem today, and it can have serious consequences.

AI bias can creep in at different stages. Sometimes, the data used to train AI systems is biased, reflecting societal inequalities. For instance, a facial recognition system trained on mostly light-skinned faces might struggle to identify people with darker skin tones accurately.
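One simple way to spot this kind of data imbalance before training is to count how much of the dataset each group makes up. The sketch below is a minimal illustration, not a complete audit: the group labels, the sample data, and the 20% threshold are all made-up assumptions.

```python
from collections import Counter

def representation_shares(labels):
    """Return each group's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def underrepresented(labels, min_share=0.2):
    """List groups whose share falls below min_share (an illustrative threshold)."""
    shares = representation_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)

# Hypothetical skin-tone annotations for a face dataset (made-up data).
sample_labels = ["lighter"] * 85 + ["darker"] * 15

if __name__ == "__main__":
    print(representation_shares(sample_labels))
    print(underrepresented(sample_labels))
```

A low share does not prove the trained model will perform badly for that group, but it is a cheap early warning that the data deserves a closer look.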

Bias can also be built into the algorithms themselves. If an algorithm gives weight to factors that unfairly disadvantage certain groups, it will produce biased outcomes. For example, an automated loan approval system might use zip code as a factor. Because zip code often correlates with income, the system could end up rejecting a disproportionate share of applications from lower-income neighborhoods.
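A rough way to check for this effect is to compare approval rates across groups and look at the ratio between the lowest and the highest. The sketch below uses made-up decisions for two hypothetical areas. The 0.8 cutoff is borrowed from the "four-fifths" rule of thumb in US employment-selection guidance and appears here only as an illustrative threshold, not as a lending standard.

```python
def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns approval rate per group."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {group: approved[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest approval rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical loan decisions grouped by area (made-up numbers).
sample_decisions = (
    [("area_a", True)] * 60 + [("area_a", False)] * 40
    + [("area_b", True)] * 30 + [("area_b", False)] * 70
)

if __name__ == "__main__":
    rates = approval_rates(sample_decisions)
    ratio = disparate_impact_ratio(rates)
    print(rates, ratio)
    if ratio < 0.8:  # illustrative threshold only
        print("Approval rates differ enough to warrant a review.")
```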

The results of AI bias aren’t just technical glitches. They can translate into real-world problems like discrimination in loan approvals, hiring processes, or criminal justice. A 2021 MIT study found that racial bias in a risk assessment tool used in US courts resulted in Black people being twice as likely as white people to be incorrectly flagged as high risk for recidivism. Errors like this can lead to harsher sentences and perpetuate racial inequalities in the justice system.
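"Incorrectly flagged as high risk" corresponds to what statisticians call a false positive, and the disparity can be measured by comparing false positive rates between groups. The sketch below shows the arithmetic on made-up records chosen to mirror the "twice as likely" pattern. The numbers and group names are hypothetical and are not taken from the study.

```python
def false_positive_rate(records):
    """records: iterable of (flagged_high_risk, reoffended) booleans.

    Returns the share of people who did NOT reoffend but were still
    flagged as high risk.
    """
    flags_for_non_reoffenders = [flagged for flagged, reoffended in records if not reoffended]
    if not flags_for_non_reoffenders:
        return 0.0
    return sum(flags_for_non_reoffenders) / len(flags_for_non_reoffenders)

# Hypothetical records: 100 non-reoffenders per group (made-up data).
group_a = [(True, False)] * 40 + [(False, False)] * 60
group_b = [(True, False)] * 20 + [(False, False)] * 80

if __name__ == "__main__":
    fpr_a = false_positive_rate(group_a)
    fpr_b = false_positive_rate(group_b)
    print(fpr_a, fpr_b, fpr_a / fpr_b)
```

Comparing error rates per group, rather than overall accuracy alone, is what exposes this kind of gap: a tool can look accurate on average while making its mistakes mostly at one group's expense.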

Why Ethics Matter in AI

Building AI with ethics in mind isn’t just about doing the right thing; it’s about unlocking AI’s true potential to make a positive impact. Ethical AI means fairness, transparency, and taking responsibility for how AI is used. This can lead to solutions for big problems we face globally, like unequal access to healthcare or climate change.

Think about it this way: if people trust AI, they are more likely to use it. Ethical AI builds trust by ensuring the technology works fairly for everyone and that people understand how it arrives at decisions. A McKinsey & Company study found that 8 in 10 executives believe AI can improve their industry’s ethics.

When users understand how AI decisions are made, they feel more comfortable interacting with AI systems. This can lead to a safer and more positive online experience for everyone.

Building Trustworthy AI Systems

Creating ethical AI and addressing AI bias require a careful, step-by-step process. This means following key ethical principles at every stage of an AI system’s development, from gathering data and training the model to using it in the real world and then keeping an eye on its performance.

Here are some key steps to consider:

- Data Collection: Drawing training data from diverse sources is crucial to mitigating bias. For instance, a facial recognition system trained solely on images of young white men would perform poorly for everyone else. Librarian degree programs and other stakeholders can play a pivotal role in AI’s societal transformation by advocating for and implementing inclusive data collection practices.

- Model Training: We can use techniques to identify and reduce bias in the AI model during training. This might involve using specialized algorithms or checking the model’s outputs for signs of unfairness.

- Deployment and Monitoring: Once the AI system is up and running, it’s essential to have people overseeing its work. This helps ensure the system is being used fairly and isn’t causing unintended harm. We can also monitor the system’s performance over time and adjust if needed.
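The monitoring step above can be sketched as a recurring check: for each time window, compare the rate of favourable decisions across groups and flag the window for human review if the gap is too wide. This is a minimal sketch under stated assumptions. The group names, the weekly windows, and the 0.1 threshold are hypothetical, and a real deployment would choose its fairness measure and threshold to fit the use case.

```python
def positive_rate(outcomes):
    """Share of favourable decisions (True) in a list of booleans."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0

def parity_gap(outcomes_by_group):
    """Largest difference in favourable-decision rate between any two groups."""
    rates = [positive_rate(outcomes) for outcomes in outcomes_by_group.values()]
    return max(rates) - min(rates)

def windows_needing_review(windows, max_gap=0.1):
    """Return the names of monitoring windows whose gap exceeds max_gap."""
    return [name for name, groups in windows.items() if parity_gap(groups) > max_gap]

# Hypothetical weekly decision logs (made-up data).
sample_windows = {
    "week_1": {
        "group_a": [True] * 50 + [False] * 50,
        "group_b": [True] * 48 + [False] * 52,
    },
    "week_2": {
        "group_a": [True] * 55 + [False] * 45,
        "group_b": [True] * 30 + [False] * 70,
    },
}

if __name__ == "__main__":
    print(windows_needing_review(sample_windows))
```

The check only raises a flag. Deciding what caused the gap and whether to adjust the system remains a job for the people overseeing it.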

Stakeholders in Ethical AI: Shared Responsibility

Building ethical AI isn’t a one-person job. Everyone involved, from the creators to the users, has a role to play.

- Developers: The people who design and build AI models have a critical role. They must be aware of potential biases in their data and their chosen algorithms. This means actively checking for and mitigating bias throughout the development process.

- Companies: Businesses that use AI technology must implement strong ethical frameworks. These frameworks should be clear guidelines for how AI is developed and used within the company. They should ensure AI is implemented responsibly and doesn’t worsen existing inequalities.

- Governments: Governments have the power to shape the future of AI through regulations. These regulations can promote transparency (disclosing how AI systems work), accountability (holding developers and companies responsible for AI’s actions), and harm reduction (minimizing potential damage to society).

- Users: Everyone who interacts with AI-powered technology is a stakeholder. By staying informed about AI and its potential biases, users can be powerful advocates for fairness and transparency. They can also use AI services from companies that prioritize ethical considerations.

According to Thomson Reuters, steps are being taken to address the societal repercussions of AI through regulatory measures. Government authorities are striving to safeguard the interests of the public and the economy while fostering trust in AI, ensuring that these regulations do not stifle the innovative potential of this burgeoning technology.

The Evolving Landscape: Regulation and Oversight

AI is rapidly changing the world, and governments are scrambling to keep up. Many countries are working on regulations to ensure AI is used fairly and safely. However, regulating AI is a complex task.

Here’s why:

- AI advances quickly: New AI technologies are always emerging, making it hard for regulations to keep pace.

- AI is global: AI systems can be developed in one country and used in another. This makes it difficult to enforce regulations at the national level alone.

International cooperation is crucial. Countries must work together to develop joint standards for the ethical development and use of AI. This will help to ensure that AI benefits everyone, everywhere.

According to a 2023 OECD survey, 80% of member countries are developing or have adopted national AI strategies. This highlights the growing global focus on establishing frameworks for responsible AI.

The Future of Ethical AI: Challenges and Opportunities

Building ethical AI isn’t a one-time fix. There are ongoing hurdles to address. Here are a few:

- Job displacement by AI: As AI changes the workplace environment, many worry that AI will take their jobs. We need solutions to ensure a smooth transition for workers affected by automation.

- The ethics of autonomous weapons: Imagine weapons that can choose their targets without human intervention. This raises serious ethical questions about who’s responsible for their actions and the potential for misuse.

However, there’s also good news! New technologies like Explainable AI (XAI) are being developed. XAI helps us understand how AI systems reach their decisions. This can make them more transparent and trustworthy.
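To give a flavour of what an explanation can look like, the sketch below covers the simplest case: a linear scoring model, where the score splits exactly into one contribution per input. XAI covers many techniques for far more complex models, so this is only a toy illustration. The feature names, weights, and applicant values are all hypothetical.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and each feature's contribution (weight * value),
    sorted from most to least influential by absolute size."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical model and applicant (made-up numbers).
sample_weights = {"income": 2.0, "debt": -3.0, "years_employed": 0.5}
sample_bias = 1.0
sample_applicant = {"income": 4.0, "debt": 2.0, "years_employed": 6.0}

if __name__ == "__main__":
    score, ranked = explain_linear_score(sample_weights, sample_bias, sample_applicant)
    print("score:", score)
    for name, contribution in ranked:
        print(f"{name}: {contribution:+.1f}")
```

Showing a user which inputs pushed a decision up or down, and by how much, is one concrete way a system can become more transparent.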

The field of AI ethics is constantly changing. We must keep learning and adapting to the new challenges as AI becomes more sophisticated and integrated into our lives. By working together, we can build a future where AI benefits everyone.

Conclusion

In closing, the journey towards ethical AI is multifaceted and ongoing. We can steer AI development towards a more ethical trajectory by acknowledging the risks of bias and embracing the potential for positive impact.

Collaboration among stakeholders, informed by ongoing dialogue and a commitment to shared principles, is essential in building a future where AI serves humanity responsibly. Let us heed the call to action and collectively strive towards a more ethical and inclusive digital future.