AI detectors (also called AI writing detectors or AI content detectors) are tools designed to detect when a text was partially or completely generated by artificial intelligence (AI) tools such as ChatGPT or Bard. They can be used to check whether a blog article or any other piece of writing is likely to have been written by AI.

This is useful, for example, in educational institutions where teachers want to check that students are doing their own writing, or for sellers trying to remove fake product reviews and other spam content.

However, these tools are new and experimental, and they are generally considered somewhat unreliable for now. Below we explain how they work, how reliable they are, and how they are being used.

How Does an AI Detector Work?

AI detectors are mostly based on language models similar to those used in the AI writing tools they are trying to detect.

The language model essentially looks at the input and asks, “Is this the sort of thing that I would have written?” If the answer is “yes,” it concludes that the text was probably written by AI.

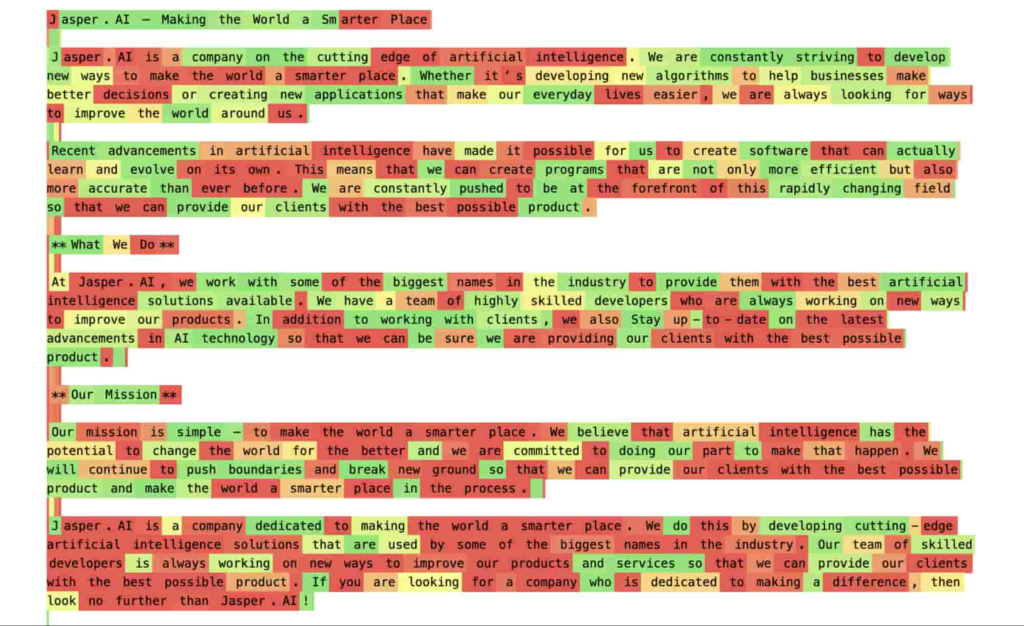

AI detectors usually report their result as a percentage. For example, a score of 40% suggests that about 40% of the content is AI-generated, while the remaining 60% was written by a human. You may be surprised when a detector reports that 40% of your content was written by AI when in reality you wrote all of it yourself. Such a result is a false positive.

Specifically, these models look for two things in a given text: perplexity and burstiness. The lower these two values are, the more likely the text is to have been generated by AI. But what do these unusual terms mean?

Perplexity

Perplexity is a measure of how unpredictable a text is: how likely it is to perplex (confuse) the average reader, that is, to make no sense or read unnaturally.

- AI language models aim to produce text with low perplexity, which is more likely to make sense and read smoothly but is also more predictable.

- Human writing tends to have higher perplexity, with more creative language choices and more original content.

- Low perplexity is taken as evidence that a text was generated by AI.
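To make the idea concrete, here is a minimal sketch of how perplexity is commonly computed: the exponential of the average negative log-probability that a model assigns to each word. A real detector would get these probabilities from a language model; the two probability lists below are made-up values for illustration only, and the function name `perplexity` is our own.

```python
import math


def perplexity(token_probs):
    """Return the perplexity of a text given the probability a model
    assigned to each of its tokens (each value must be in (0, 1])."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(p <= 0 or p > 1 for p in token_probs):
        raise ValueError("probabilities must be in the range (0, 1]")
    # Average negative log-probability per token, then exponentiate.
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Hypothetical probabilities, not taken from any real model.
predictable_text = [0.9, 0.8, 0.9, 0.85]   # the model expected each word
surprising_text = [0.2, 0.05, 0.1, 0.3]    # the model was often surprised

print(perplexity(predictable_text))  # lower value: reads as more "AI-like"
print(perplexity(surprising_text))   # higher value: reads as more "human-like"
```

The more confidently the model predicts each word, the closer the perplexity gets to 1, which is the kind of low score a detector treats as a sign of AI writing.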

Burstiness

Burstiness is a measure of variation in sentence structure and length. It is something like perplexity, but on the level of sentences rather than words.

- A text with little variation in sentence structure and sentence length has low burstiness.

- A text with greater variation has high burstiness.

AI text tends to be less “bursty” than human text. Because language models predict the most likely word to come next, they tend to produce sentences of average length (say, 15–20 words) with conventional structures.

That’s why AI writing can sometimes seem monotonous. Low burstiness indicates that a text is likely to have been generated by AI.
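As a rough illustration, burstiness can be approximated by how much sentence lengths vary. The sketch below uses the standard deviation of words per sentence. This is a simplification we chose for the example, not the formula of any particular detector, and it ignores sentence structure entirely. The function names and sample texts are hypothetical.

```python
import re
import statistics


def sentence_lengths(text):
    """Split text on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Approximate burstiness as the population standard deviation
    of sentence lengths (an assumption made for this sketch)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # variation is undefined for fewer than two sentences
    return statistics.pstdev(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "No. The committee deliberated for hours before reaching any decision at all."

print(sentence_lengths(uniform), burstiness(uniform))
print(sentence_lengths(varied), burstiness(varied))
```

The first text has three sentences of identical length, so its score is zero. The second mixes a one-word sentence with a long one and scores much higher, which is the pattern the article describes as more typical of human writing.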

How Reliable Are AI Detectors?

In our experience, AI detectors usually work well, especially with longer texts, but they can easily fail if the AI output was prompted to be less predictable or was edited or paraphrased after being generated.

AI detectors can also easily misidentify human-written text as AI-generated if it happens to match the criteria (low perplexity and low burstiness).

Our research into the best AI detectors shows that no tool can provide complete accuracy: the highest accuracy we found was 83.5% in a premium (paid) tool, and 67.5% in the best free tool.

These tools give an indication of how much of a text was likely generated by AI tools such as ChatGPT, but we advise against treating them as evidence on their own. As language models continue to develop, it is likely that AI detection tools will always have to race to keep up with them.

Even the more confident providers usually admit that their tools can’t be used as definitive evidence that a text is AI-generated, and universities so far don’t put much faith in them.

How Accurate Are AI Detectors?

We have an in-depth study that looks at the accuracy of our own AI detector and those of other companies. In a nutshell, not all detectors are the same, and their usefulness depends on your use case.

Accuracy claims made by any detector that does not provide data to support them should be taken with a grain of salt.

No detector is perfect. Every detector will have some percentage of false positives (a false positive is when the detector incorrectly concludes that a human-written text was generated by AI) and false negatives (when AI-generated text is incorrectly judged to be human-written).
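If you want to check a detector's claims yourself, one approach is to run it on texts whose origin you already know and count the errors. The sketch below shows the arithmetic under that assumption. The function name `detector_error_rates` and the five sample results are hypothetical, not data from any real tool.

```python
def detector_error_rates(is_ai, flagged_ai):
    """Compare known labels with detector verdicts.

    is_ai:      list of bools, True if the text really was AI-generated
    flagged_ai: list of bools, True if the detector flagged it as AI
    """
    if not is_ai or len(is_ai) != len(flagged_ai):
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(is_ai, flagged_ai))
    tp = sum(1 for real, flag in pairs if real and flag)          # AI, caught
    fn = sum(1 for real, flag in pairs if real and not flag)      # AI, missed
    fp = sum(1 for real, flag in pairs if not real and flag)      # human, flagged
    tn = sum(1 for real, flag in pairs if not real and not flag)  # human, cleared
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Share of human-written texts wrongly flagged as AI.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        # Share of AI-generated texts the detector missed.
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }


# Made-up example: two AI texts and three human texts.
truth = [True, True, False, False, False]
verdicts = [True, False, True, False, False]
rates = detector_error_rates(truth, verdicts)
print(rates)
```

Note that accuracy alone hides the split between the two kinds of error. In a classroom, the false positive rate matters most, because it is the share of honest students who would be wrongly flagged.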

Conclusion

AI detectors have helped university instructors and others in academia check the originality of content written by students or content creators. Whether they will work for you, however, depends on your accuracy requirements.

If you need perfectly accurate results, AI detectors are not suitable. But if you can accept results with around 95% accuracy and under 5% false positives, or you just want an indication of whether content is human-written or AI-generated, these detectors will serve your needs.