Each year, more open-source AI communities emerge and contribute to the AI landscape.

A prominent name in the open-source AI landscape is Hugging Face. The company is named after the "hugging face" emoji (🤗).

It was founded by Clément Delangue and Julien Chaumond in 2016. It started out as a chatbot company and later became known in the AI community as a provider of open-source NLP tools.

Today, its models are used in production by tech giants such as Microsoft, in Bing. Hugging Face has come a long way, from being known as just a chatbot company to helping developers use transformer language models such as BERT, GPT, and XLNet.

Image source: Depositphotos

In this machine learning tutorial, we’ll show how to use Hugging Face with Python.

Hugging Face Machine Learning Tutorial

Hugging Face has become a hub for a vast collection of models and datasets available for public use. It has standardized many of the steps involved in pretraining and training models.

Anyone can use the datasets and models provided by Hugging Face via simple API calls.

Hugging Face provides a hub where data scientists from all over the globe can get together and share datasets and models.

Hugging Face has made it easier for data scientists to collaborate with each other remotely and benefit from each other’s research.

Hugging Face Models

Whenever you create a new model, it lives in its own repository, much like a Git repository.

You can create branches and keep versioned revisions of your model.
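Because each model lives in a Git-style repository, any file in it can be addressed by repository ID, revision (a branch, tag, or commit hash), and filename; from_pretrained also accepts a revision argument for the same reason. The minimal sketch below builds such a download URL by hand. It assumes the https://huggingface.co/{repo_id}/resolve/{revision}/{filename} pattern that the huggingface_hub library's hf_hub_url helper produces for model repositories; the file_url function itself is hypothetical, for illustration only.

```python
from urllib.parse import quote

# Assumption: the public Hugging Face Hub endpoint.
HUB_ENDPOINT = "https://huggingface.co"


def file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Return the download URL of one file in a model repo at a given revision.

    Hypothetical helper for illustration; huggingface_hub.hf_hub_url is the
    library function that builds these URLs in practice.
    """
    # A revision can be a branch, a tag, or a commit hash. Encode it fully,
    # since refs such as "refs/pr/1" contain slashes.
    return f"{HUB_ENDPOINT}/{repo_id}/resolve/{quote(revision, safe='')}/{filename}"


print(file_url("dslim/bert-base-NER", "config.json"))
# https://huggingface.co/dslim/bert-base-NER/resolve/main/config.json
```

Pinning a revision this way, rather than always reading main, keeps results reproducible if the model's owner later pushes new weights.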

You can make your models public, so they are available to the Hugging Face community, or keep them private to you or your organization.

Hugging Face Datasets

Similar to models, you can create new datasets and set them to public or private.

Hugging Face Spaces

Hugging Face Spaces are a way to host and share demos of your projects.

You can use Spaces to showcase your portfolio and to collaborate remotely with colleagues or fellow engineers.

Let’s see how we can use Hugging Face with simple API calls.

First, we will use Hugging Face to perform Named Entity Recognition (NER).

Machine learning tutorial: Named Entity Recognition

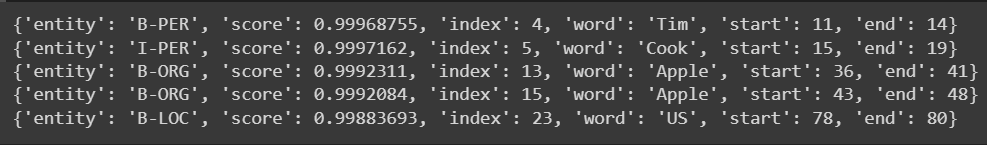

Named Entity Recognition is the task of finding named entities in a given text and classifying them into categories. In this machine learning tutorial, we will recognize entities such as persons, locations, and organizations.
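NER models commonly label each token with a BIO tag: B- marks the first token of an entity, I- a token inside the same entity, and O a token outside any entity. The sketch below tags a whitespace-split version of this tutorial's example sentence, using PER and ORG types like those of the model used later. The word-level split and the bio_tags helper are simplifications for illustration; a BERT-based model actually tags WordPiece subword tokens.

```python
def bio_tags(words, entities):
    """Label words with BIO tags.

    entities: list of (first_word, end_word_exclusive, entity_type) tuples.
    """
    tags = ["O"] * len(words)  # start with every word outside any entity
    for first, end, entity_type in entities:
        tags[first] = f"B-{entity_type}"  # beginning of the entity
        for i in range(first + 1, end):
            tags[i] = f"I-{entity_type}"  # inside the same entity
    return tags


words = "My name is Tim Cook , I'm the CEO of Apple .".split()
entities = [(3, 5, "PER"), (10, 11, "ORG")]  # "Tim Cook" and "Apple"
tags = bio_tags(words, entities)

for word, tag in zip(words, tags):
    print(f"{word:6} {tag}")
```

The B-/I- distinction is what lets a model separate two adjacent entities of the same type instead of merging them into one.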

Let’s take a look at the example below:

Import the necessary libraries.

from transformers import AutoTokenizer, AutoModelForTokenClassification
from transformers import pipeline

Now load the pretrained tokenizer and model.

tokenizer = AutoTokenizer.from_pretrained("dslim/bert-base-NER")

model = AutoModelForTokenClassification.from_pretrained("dslim/bert-base-NER")

Now let’s create a pipeline using the pretrained tokenizer and model.

nlp = pipeline("ner", model=model, tokenizer=tokenizer)
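Conceptually, the "ner" pipeline tokenizes the text, runs the model to get one row of scores (logits) per token, turns each row into probabilities with a softmax, and keeps the most likely label from the model's id2label mapping, skipping tokens labelled O by default. The pure-Python sketch below reproduces only that last step. The logits are made up, the three-label id2label mapping is a hypothetical reduced label set, and predict_labels is our own illustrative function, not the transformers implementation.

```python
import math


def predict_labels(words, logits, id2label):
    """Turn per-token logits into pipeline-style predictions (sketch)."""
    predictions = []
    for index, (word, row) in enumerate(zip(words, logits)):
        # Softmax: subtract the max for numerical stability, then normalise.
        peak = max(row)
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        probs = [e / total for e in exps]
        best = max(range(len(probs)), key=lambda i: probs[i])
        label = id2label[best]
        if label != "O":  # like the pipeline's default, skip "outside" tokens
            predictions.append(
                {"entity": label, "score": probs[best], "index": index, "word": word}
            )
    return predictions


id2label = {0: "O", 1: "B-PER", 2: "I-PER"}  # hypothetical reduced label set
words = ["Tim", "Cook", "says"]
logits = [[0.0, 3.0, 1.0], [0.0, 1.0, 3.0], [4.0, 0.0, 0.0]]  # made-up scores

for p in predict_labels(words, logits, id2label):
    print(p)
```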

example = "My name is Tim Cook, I'm the CEO of Apple. Apple has its headquarters in the US."

Then run the pipeline on the example text and print the results.

ner_results = nlp(example)
for item in ner_results:
    print(item)

Let’s see the results now.
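With its default settings, the "ner" pipeline returns one dictionary per token, with keys such as entity, score, index, word, start, and end, so a multi-token name like "Tim Cook" comes back in pieces. The pipeline's aggregation_strategy argument (for example, aggregation_strategy="simple") can merge these for you. The sketch below shows the idea in plain Python: it joins consecutive tokens of the same type when the tag is I- or the word is a WordPiece continuation (##...), then slices the original text using the start/end offsets. The input dictionaries are hand-written with made-up scores, not real model output, and group_entities is a hypothetical helper.

```python
def group_entities(text, predictions):
    """Merge token-level predictions into whole entities (sketch)."""
    groups = []
    for p in predictions:
        if p["entity"] == "O":
            continue
        prefix, _, label = p["entity"].partition("-")  # "B-PER" -> "B", "PER"
        last = groups[-1] if groups else None
        continues = (
            last is not None
            and last["label"] == label
            and p["index"] == last["last_index"] + 1  # adjacent token
            and (prefix == "I" or p["word"].startswith("##"))
        )
        if continues:
            last["end"] = p["end"]
            last["last_index"] = p["index"]
            last["scores"].append(p["score"])
        else:
            groups.append({"label": label, "start": p["start"], "end": p["end"],
                           "last_index": p["index"], "scores": [p["score"]]})
    # Slice the original text with the offsets and average the token scores.
    return [
        {"entity_group": g["label"],
         "word": text[g["start"]:g["end"]],
         "score": sum(g["scores"]) / len(g["scores"])}
        for g in groups
    ]


text = "My name is Tim Cook, I'm the CEO of Apple."
predictions = [  # hand-written example input with made-up scores
    {"entity": "B-PER", "score": 0.9, "index": 4, "word": "Tim", "start": 11, "end": 14},
    {"entity": "I-PER", "score": 0.8, "index": 5, "word": "Cook", "start": 15, "end": 19},
    {"entity": "B-ORG", "score": 0.7, "index": 13, "word": "Apple", "start": 36, "end": 41},
]

for group in group_entities(text, predictions):
    print(group)
```

Slicing by character offsets, rather than joining the token strings, gives back the original spelling and spacing of each entity.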

Machine Learning Tutorial for Text Summarization

Text summarization is another area of NLP, in which models condense long articles into a few sentences.

For this purpose, we will use Facebook’s pretrained BART Large model fine-tuned on the CNN/Daily Mail dataset (facebook/bart-large-cnn).
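BART models accept a limited amount of input (the facebook/bart-large-cnn configuration allows at most 1,024 token positions), so a very long article usually has to be split before summarization. The sketch below is one simple approach and is not part of the Hugging Face API: it splits on sentence-ending punctuation and packs whole sentences into chunks under a word budget. Words are only a rough proxy for tokens, so the default max_words=400 is an assumption meant to leave headroom; chunk_sentences is a hypothetical helper, and each chunk could then be passed to a summarization pipeline separately.

```python
import re


def chunk_sentences(text, max_words=400):
    """Pack whole sentences into chunks of at most max_words words (sketch).

    A single sentence longer than max_words becomes its own oversized chunk.
    """
    if not text.strip():
        return []
    # Split after ".", "!" or "?" followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current, count = [], [], 0
    for sentence in sentences:
        n = len(sentence.split())
        # Start a new chunk if this sentence would exceed the budget.
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


print(chunk_sentences("One two three. Four five. Six.", max_words=3))
# ['One two three.', 'Four five. Six.']
```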

Let’s take a look at how easy it is to use Facebook’s text summarization model with a single API call. First, import pipeline:

from transformers import pipeline

Specify the model you wish to use.

model = "facebook/bart-large-cnn"

Use the pipeline API to load the summarization model you want to use.

summarizer = pipeline("summarization", model=model)

This is the sample article we gave to our model.

ARTICLE = """The tower is 324 metres (1,063 ft) tall, about the same height as an 81-storey building, and the tallest structure in Paris. Its base is square, measuring 125 metres (410 ft) on each side. During its construction, the Eiffel Tower surpassed the Washington Monument to become the tallest man-made structure in the world, a title it held for 41 years until the Chrysler Building in New York City was finished in 1930. It was the first structure to reach a height of 300 metres. Due to the addition of a broadcasting aerial at the top of the tower in 1957, it is now taller than the Chrysler Building by 5.2 metres (17 ft). Excluding transmitters, the Eiffel Tower is the second tallest free-standing structure in France after the Millau Viaduct. """

We can specify the maximum and minimum length of the summary, and whether the model should use sampling when generating it.
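To build intuition for these parameters, here is a toy, pure-Python decoder over a made-up next-token distribution; it is not how transformers implements generation. In this toy, max_length caps the number of generated tokens, min_length removes the end-of-sequence token from the choices until that many tokens exist, and do_sample switches from always taking the most likely token to drawing one at random in proportion to its probability. The real pipeline counts lengths in model tokens and, depending on the model's generation settings, may use beam search when do_sample=False. The names toy_decode and fake_model are hypothetical.

```python
import random

EOS = "</s>"  # end-of-sequence token string used by BART


def toy_decode(next_probs, max_length, min_length, do_sample=False, seed=0):
    """Generate tokens one at a time from next_probs(prefix) -> {token: prob}."""
    rng = random.Random(seed)
    tokens = []
    while len(tokens) < max_length:
        probs = dict(next_probs(tokens))
        if len(tokens) < min_length:
            probs.pop(EOS, None)  # too short: the sequence may not end yet
        if do_sample:
            choices, weights = zip(*probs.items())
            token = rng.choices(choices, weights=weights)[0]  # random draw
        else:
            token = max(probs, key=probs.get)  # greedy: most likely token
        if token == EOS:
            break
        tokens.append(token)
    return tokens


def fake_model(prefix):
    # Hypothetical distribution that ignores the prefix; EOS is the favourite.
    return {EOS: 0.6, "tower": 0.3, "Paris": 0.1}


print(toy_decode(fake_model, max_length=5, min_length=0))  # []
print(toy_decode(fake_model, max_length=5, min_length=2))  # ['tower', 'tower']
print(toy_decode(fake_model, max_length=1, min_length=3))  # ['tower']
```

With do_sample=False the output is deterministic, which is why the tutorial's summary is reproducible from run to run.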

results = summarizer(ARTICLE, max_length=130, min_length=30, do_sample=False)

Now let’s see the results.

print(results[0]["summary_text"])

Results

The tower is 324 metres (1,063 ft) tall, about the same height as an 81-storey building. It is the tallest structure in Paris and the second tallest free-standing structure in France after the Millau Viaduct.

In this machine learning tutorial, we saw how easily we can use Hugging Face’s pretrained models for inference in our own tasks.

We learned what models, datasets, and Spaces are on Hugging Face and how we can use them.

Then, we went over how to use Hugging Face models for named entity recognition and text summarization in our Python code.

Feel free to reach out if you liked this machine learning tutorial on Hugging Face. Get in touch to find out how we can help you.