By Dr Stylianos Kampakis

Tokenomics is one of the most important parts of any Web3 project.

At its core, tokenomics encompasses the strategies and mechanisms by which tokens are issued, distributed, and managed, directly impacting the sustainability and growth potential of the project.

This includes not just the initial allocation and issuance of tokens through mechanisms such as token offerings or airdrops, but also the long-term considerations such as governance structures, incentivization models, and market dynamics.

In crafting effective tokenomics, projects must balance various elements such as supply caps, token burning protocols, staking rewards, and transaction fees. These elements work together to create an ecosystem that encourages participation, rewards contributors, and aligns the interests of stakeholders with the overall health of the platform.

This is not an easy task.

I have spent a good portion of the last 6 years of my career researching token economies. From agent-based modelling, to tokenomics auditing, to the Tesseract Academy’s tokenomics course, I have been at the forefront of this field.

TokenLab is my latest innovation, and I am very happy to be sharing this project with the Web3 community!

TokenLab Principles

TokenLab is a library for simulating token economies based on agent-based models. It abides by the following principles:

- Modularity: The different components can be merged as the user sees fit.

- Explicitness: The assumptions behind the different modules are stated clearly, as well as any limitations.

- Intermediate-level-of-abstraction: TokenLab is focused on simulating the actions of aggregate groups of agents (e.g. a certain user cohort), instead of individuals.

- Focus on the economy: TokenLab’s tools are focused on answering questions about a token economy, and performing things such as stress tests.

- Flexibility: TokenLab is designed in a way that it offers maximum flexibility to those who desire it. It can support logical flows, or arbitrary mechanisms within its simulations, for any kind of agent action.

TokenLab example use cases

So, let’s see how these principles apply in practice.

First of all, install TokenLab:

pip install tokenlab

Then go through all the basic imports and some basic parameters:

from TokenLab.simulationcomponents import *

from TokenLab.simulationcomponents.usergrowthclasses import *

from TokenLab.simulationcomponents.transactionclasses import *

from TokenLab.simulationcomponents.tokeneconomyclasses import *

import numpy as np

import matplotlib.pyplot as plt

from TokenLab.simulationcomponents.agentpoolclasses import *

from TokenLab.simulationcomponents.pricingclasses import *

from TokenLab.simulationcomponents.addons import AddOn_RandomNoise, AddOn_RandomNoiseProportional

from TokenLab.utils.helpers import *

ITERATIONS=60

HOLDING_TIME=1.1

SUPPLY=10**8

INITIAL_PRICE=0.1

Agent pools

TokenLab is based on a modular logic. A token economy is composed of agent pools that can have various dependencies on each other.

The most basic agent pool contains a group of users who act independently from each other. This, for example, can be a group of consumers, who independently decide to buy a token in order to access a good or a service.

Agent pools use Controller objects for their internal components.

The simplest type of agent pool is the AgentPool_Basic type. It assumes that there are only two Controller objects needed: one to simulate the number of users, and one to simulate the volume of transactions.

We can also simply provide a fixed number for each of those parameters. This is a very simple case, where we assume a steady number of users, and the total value of transactions at each unit of time is simply users * transactions_controller.

ap_fiat=AgentPool_Basic(users_controller=10000,transactions_controller=1000,currency='$')

Currency and agent pools

In this particular example, we set the currency to USD, which is the default option for token economies. When creating an agent pool, we can denominate it either in USD or in the token. An AgentPool_Basic object is used to generate transactions. When these transactions are denominated in fiat, the token economy converts them internally into tokens when calculating the price and other parameters.

If the agent pool’s currency were denominated in the token, then it would take the current price and run calculations to work out the transaction volume.

It’s usually easier to make assumptions for the demand of a token based on fiat, rather than a token. However, an agent pool with the token as a currency can be used when we are simulating pools like speculators of the token.
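The conversion between the two denominations can be sketched in plain Python. This is only a minimal illustration and not TokenLab's internal code: the helper names `fiat_to_tokens` and `tokens_to_fiat` are hypothetical, and the numbers simply reuse the constants defined earlier in this tutorial.

```python
# Hypothetical helpers illustrating the fiat/token conversion described above.
# They are NOT part of TokenLab's API.

def fiat_to_tokens(fiat_volume: float, token_price: float) -> float:
    """Convert a fiat-denominated volume into tokens at the given price."""
    if token_price <= 0:
        raise ValueError("token_price must be positive")
    return fiat_volume / token_price


def tokens_to_fiat(token_volume: float, token_price: float) -> float:
    """Convert a token-denominated volume into fiat at the given price."""
    return token_volume * token_price


users = 10_000            # fixed number of users, as in ap_fiat
avg_transaction = 1_000   # fixed transaction value per user, in USD
price = 0.1               # same value as INITIAL_PRICE

# Fiat volume generated by the pool in one iteration.
fiat_volume = users * avg_transaction
# The same volume expressed in tokens at the current price.
token_volume = fiat_to_tokens(fiat_volume, price)

print(fiat_volume, token_volume)
```

A pool denominated in the token would go the other way, using something like `tokens_to_fiat` whenever a fiat figure is needed.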

te=TokenEconomy_Basic(holding_time=HOLDING_TIME,supply=SUPPLY,token='tokenA',initial_price=INITIAL_PRICE)

te.add_agent_pools([ap_fiat])

meta=TokenMetaSimulator(te)

meta.execute(iterations=ITERATIONS,repetitions=50)

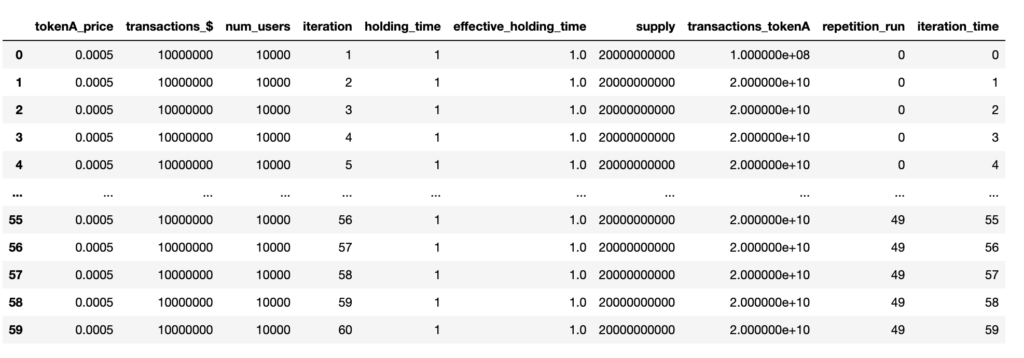

reps=meta.get_data()

Now if you type ‘reps’ you should see something like the following table:

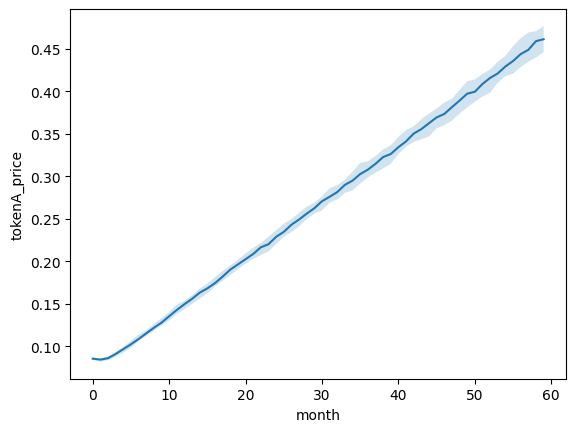

We can use the get_timeseries() method for any of the columns produced by the metasimulator, in order to get a graph averaged across all repetitions/runs.

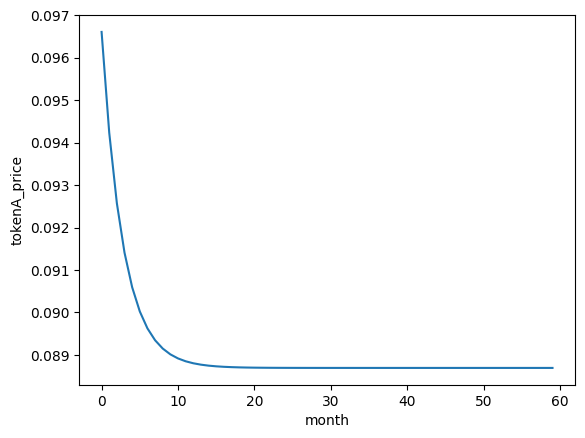
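Conceptually, averaging a column across repetitions works along the lines of the sketch below. It assumes nothing about how TokenLab stores its results: `noisy_run` is a hypothetical stand-in for a single simulation run, and `average_across_runs` is an illustrative helper, not a TokenLab function.

```python
import random


def noisy_run(iterations: int, rng: random.Random) -> list[float]:
    """One hypothetical run: a flat price of 0.1 plus Gaussian noise."""
    return [0.1 + rng.gauss(0, 0.01) for _ in range(iterations)]


def average_across_runs(runs: list[list[float]]) -> list[float]:
    """Average the value of each iteration over all repetitions."""
    n = len(runs)
    # zip(*runs) groups together the i-th value of every run.
    return [sum(values) / n for values in zip(*runs)]


rng = random.Random(42)
runs = [noisy_run(60, rng) for _ in range(50)]  # 50 repetitions of 60 iterations
mean_series = average_across_runs(runs)

print(len(mean_series))
```

The averaged series has one value per iteration, and its noise is much smaller than that of any single run, which is why the averaged plot looks smooth.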

As you can see below, the price drops slightly towards the fair value of the token. This happens because the total supply is slightly higher than the current transaction volume can support if the token is to preserve its price.

plot,data=meta.get_timeseries('tokenA_price')

plot
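One common way to reason about the link between supply, transaction volume and price is the equation of exchange, MV = PQ, with the velocity V taken as the inverse of the holding time. The sketch below assumes that relationship. This tutorial does not state the exact pricing rule that TokenLab applies, so the function `equation_of_exchange_price` and the transaction volume used here are hypothetical, and the output is not meant to reproduce the simulation above.

```python
def equation_of_exchange_price(fiat_volume: float, holding_time: float, supply: float) -> float:
    """Token price implied by M * V = P * Q, assuming V = 1 / holding_time.

    fiat_volume  : value transacted per unit of time, in fiat (the P * Q term)
    holding_time : average time a token is held between transactions
    supply       : number of tokens in circulation
    """
    return fiat_volume * holding_time / supply


supply = 10**8           # same value as SUPPLY
holding_time = 1.1       # same value as HOLDING_TIME
fiat_volume = 8_000_000  # hypothetical figure, chosen only for illustration

implied = equation_of_exchange_price(fiat_volume, holding_time, supply)
# With the same demand, doubling the supply halves the implied price.
diluted = equation_of_exchange_price(fiat_volume, holding_time, 2 * supply)

print(implied, diluted)
```

Under this assumption, a supply that is too large for the transaction volume implies a price below the initial one, which is the kind of downward adjustment described above.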

Adding variable components in simulations

Now let’s try something more interesting. Let’s simulate steady user growth.

Let’s use the UserGrowth_Spaced class to create a user base that is growing.

That class uses functions from utils.helpers and numpy in order to simulate smooth user growth. Let’s see what this looks like below. The class requires you to pre-specify the initial and final number of users, and to choose one of the following functions:

- log_saturated_space: This is a function that creates logarithmic growth subject to a saturation effect.

- np.linspace, np.logspace, np.geomspace: these are numpy functions for linear, logarithmic and geometric growth.

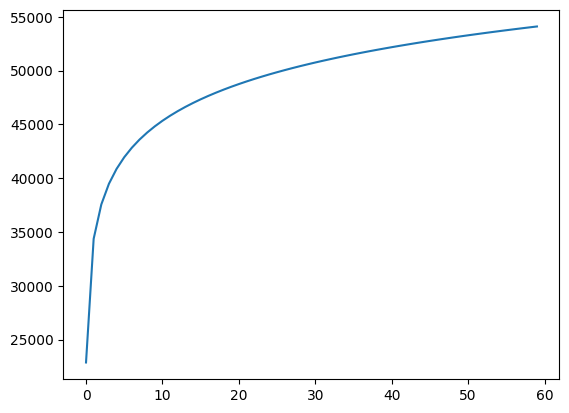
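To get a feel for how these growth shapes differ, here is a small pure-Python sketch. `linear_space` and `geometric_space` mimic what `np.linspace` and `np.geomspace` compute. `saturating_log_space` is a hypothetical stand-in: the exact formula behind `log_saturated_space` is not shown in this tutorial, so the curve below only illustrates the general idea of fast early growth that flattens out.

```python
import math


def linear_space(start: float, stop: float, num: int) -> list[float]:
    """Evenly spaced values between start and stop (like np.linspace)."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def geometric_space(start: float, stop: float, num: int) -> list[float]:
    """Values with a constant growth ratio (like np.geomspace)."""
    ratio = (stop / start) ** (1 / (num - 1))
    return [start * ratio**i for i in range(num)]


def saturating_log_space(start: float, stop: float, num: int) -> list[float]:
    """Hypothetical log-shaped curve: rapid growth at first, then flattening."""
    scale = math.log(num)  # chosen so that the last point lands exactly on stop
    return [start + (stop - start) * math.log(1 + i) / scale for i in range(num)]


lin = linear_space(100, 54_000, 60)
geo = geometric_space(100, 54_000, 60)
sat = saturating_log_space(100, 54_000, 60)

# Compare the three curves halfway through the simulation.
print(round(geo[30]), round(lin[30]), round(sat[30]))
```

All three curves start and end at the same user counts. Halfway through, the geometric curve is still far below the linear one, while the saturating curve has already covered most of the growth.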

In the example below, we will use log_saturated_space. Given that we have only one pool, and that the transaction size is constant (the total amount of transactions is simply users * averagetransaction), we see that the value of the token appreciates in the same way as the user base grows. Again, this is a very basic example, which would be unlikely to take place in the real world, but is useful for demonstration purposes.

usm_fiat=UserGrowth_Spaced(100,54000,ITERATIONS,log_saturated_space)

ap_fiat=AgentPool_Basic(users_controller=usm_fiat,transactions_controller=1000,currency='$')

plt.plot(usm_fiat.get_users_store())

You should see a plot like the one below:

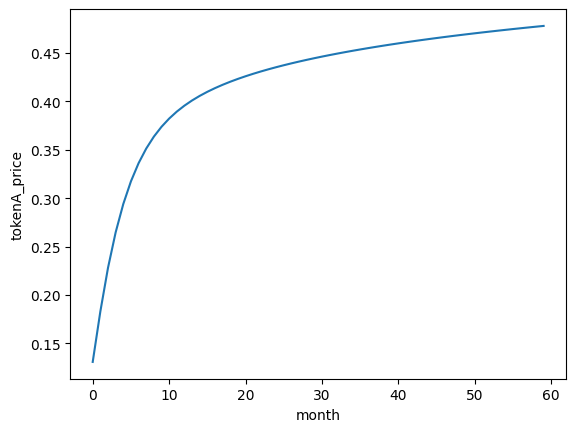

Now run the simulation again and plot the price as before. You should be seeing the following plot

Adding stochastic components

Now let’s also include a stochastic component in transactions. For this purpose we will use the TransactionManagement_Stochastic class. This is one of the more complicated classes in TokenLab. The class works as follows:

- You can define the activity_probs which determine the % of users which are active at any given time. This % is sampled from a binomial distribution.

- You can define a distribution for the average value of the transaction per iteration. This can be any distribution with location and scale parameters from scipy.stats. By default, the distribution is the Gaussian. In this example we’ve set the average value to be 1000, and the standard deviation to be 200.

- You can define the number of transactions per active user. By default, this is done through a Poisson distribution. In this example, we define the parameter mu=1.
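The three sampling steps listed above can be sketched with the standard library alone. This is an illustration of the idea rather than TokenLab's implementation: the function names are hypothetical, and the way the three draws are combined into a total volume (number of transactions times the average value for that iteration) is an assumption.

```python
import math
import random


def sample_poisson(mu: float, rng: random.Random) -> int:
    """Draw from a Poisson distribution using Knuth's multiplication method."""
    threshold = math.exp(-mu)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate_iteration(num_users, activity_prob, value_loc, value_scale, mu, rng):
    """Return (active_users, total_fiat_volume) for a single iteration."""
    # Binomial draw: each user is active independently with probability activity_prob.
    active_users = sum(rng.random() < activity_prob for _ in range(num_users))
    # Average transaction value for this iteration, drawn from a Gaussian.
    avg_value = rng.gauss(value_loc, value_scale)
    # Number of transactions per active user, drawn from a Poisson distribution.
    num_transactions = sum(sample_poisson(mu, rng) for _ in range(active_users))
    # Assumed combination rule: total volume = transactions * average value.
    return active_users, num_transactions * avg_value


rng = random.Random(0)
active, volume = simulate_iteration(1_000, 0.25, 1000, 200, 1, rng)
print(active, round(volume, 2))
```

Raising `activity_prob` from one iteration to the next increases the expected number of active users, and with it the expected transaction volume.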

You will see that now the price fluctuates more wildly as transactions are stochastic. However, because the activity probabilities rise over time (users become more active), the expected average price per month follows a rising trend.

usm_fiat=UserGrowth_Spaced(100,54000,ITERATIONS,log_saturated_space)

tsm_fiat=TransactionManagement_Stochastic(activity_probs=np.linspace(0.25,1,ITERATIONS),

value_dist_parameters={'loc':1000,'scale':200},

transactions_dist_parameters={'mu':1})

ap_fiat=AgentPool_Basic(users_controller=usm_fiat,transactions_controller=tsm_fiat,currency='$')

te=TokenEconomy_Basic(holding_time=HOLDING_TIME,supply=SUPPLY,token='tokenA',initial_price=INITIAL_PRICE,supply_is_added=False)

te.add_agent_pools([ap_fiat])

meta=TokenMetaSimulator(te)

meta.execute(iterations=ITERATIONS,repetitions=50)

reps=meta.get_data()

plot,data=meta.get_timeseries('tokenA_price')

plot

You should get the following plot

Congratulations! Now you know how to run basic stochastic simulations in TokenLab!

Summary: Agent based modelling for web3 token economies

Tokenomics is a vast, emerging field. TokenLab simplifies the creation of agent-based models for token economies, and streamlines their analysis.

In this tutorial, we just scratched the surface of what TokenLab can do. Nevertheless, all the basic elements are in this tutorial:

- Agent pools

- User growth controllers

- Transactions controllers

- Token economy objects

In subsequent tutorials we will delve deeper into TokenLab and demonstrate how we can better utilise its stochastic capabilities.

If you are interested in learning more about tokenomics, make sure to get in touch.