Authors: Linas Stankevicius, Stylianos Kampakis, Octopus

Contents

• Abstract

• Origins of Structural Analysis

• Example of Structural Analysis

• Structural Analysis in Complex Systems

• Complexity of Token Economies

• Structural Analysis in Token Economies

• Non-Technical

• Technical

• Conclusion

Abstract

In this article, we will explore the concept of Structural Analysis in both engineering and economic systems. Later, we will examine how to adapt structural analysis to token economies with the goal of identifying weak links and vulnerabilities.

Origins of Structural Analysis

Structural analysis originates from civil and structural engineering. It determines the effects of loads on physical structures and their components. This type of analysis is applied to structures that must withstand loads, including buildings, bridges, vehicles, machinery, furniture, attire, soil strata, prostheses, and biological tissue.

Engineers frequently decompose complex structures into simpler components to assess and design each part individually. This approach is termed the “divide and conquer” method in structural analysis and design. By doing this, engineers can ensure that each individual component is appropriately designed to bear its share of the load and fulfill its intended function. Let’s take a glance at how it typically works:

Breaking Down: Complex structures are divided into smaller, more manageable elements. For example, a multi-story building might be broken down into individual floors, columns, beams, and slabs.

Element Analysis: Each element is analyzed for various parameters like stress, strain, bending moments, shear forces, etc., under different loading conditions. This is often done using mathematical models and equations specific to the type of element.

Boundary Conditions: When analyzing individual elements, it’s essential to consider how they connect to adjacent elements. These connections, or boundary conditions, ensure that the forces and moments are transferred correctly between elements.

Combining Results: After analyzing individual elements, engineers combine the results to get a comprehensive picture of the entire structure’s behavior. This ensures that the structure as a whole can safely support the applied loads.

Structural analysis, as you might guess, is often an iterative process. Engineers might find that changes made to one element affect others. They go back and forth, adjusting designs until the entire structure is safe and efficient. The primary objective of structural analysis is to design a structure that can resist all applied loads without failing during its intended life. The results of structural analysis are used to verify a structure’s fitness for use, often reducing the need for physical testing.

Example of Structural Analysis

💡 Original Source: https://arc-engineer.com/what-is-an-example-of-structural-analysis/

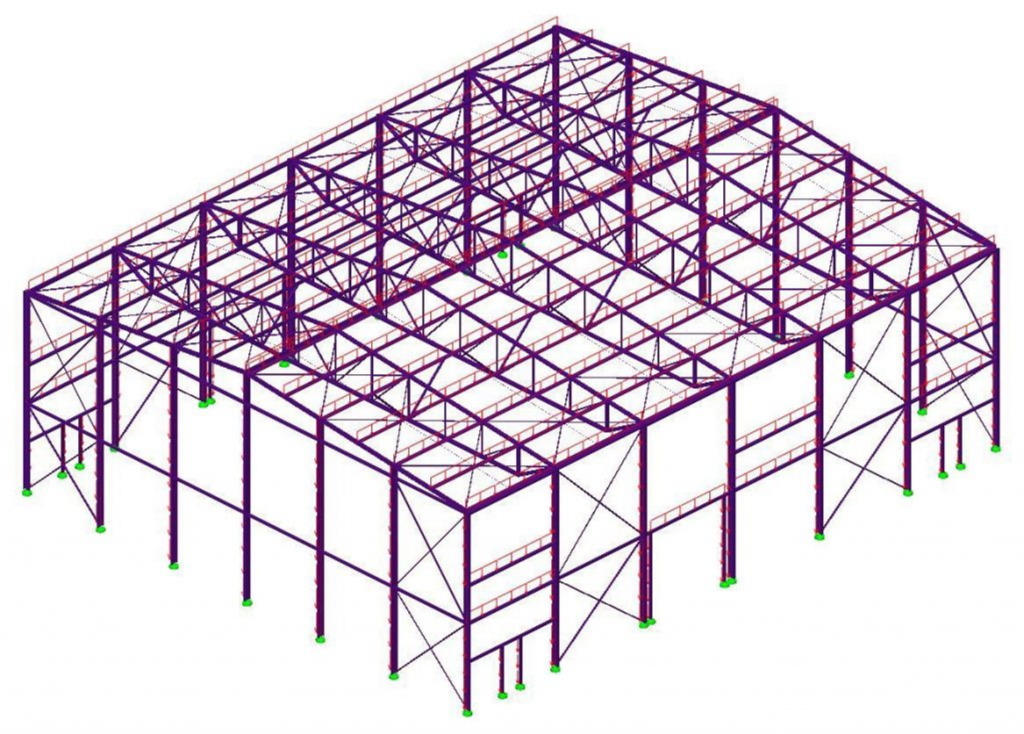

Imagine a warehouse named “Greenville Warehouse” on the outskirts of a town known for its heavy snowfall and gusty winds. This warehouse is primarily used to store heavy farming tractors and ranching equipment.

We start by examining the architectural drawings of the warehouse. A truss system supports the roof, and the entire structure rests on large columns connected by joints.

Loading Conditions: Given the warehouse’s location, we consider three primary loads:

- Snow Load: Due to the town’s heavy snowfall.

- Wind Load: The area is known for its gusty winds.

- Dead Load: The weight of the structure itself.

Software-Based Analysis: Using FEA software, we input the warehouse’s drawings to create a digital model. This model helps us simulate how the structure will behave under the given loading conditions.

Element-by-Element Examination: With the model ready, we analyze each structural element. We notice that the central roof truss shows signs of excessive stress, especially where it meets the columns. A few beams are under-designed for the weight they carry.

Redesign and Material Replacement: We decide to replace the central roof truss’s material with a stronger steel alloy to better handle the stress. Additional cross-beams are introduced for added support. Two columns near the entrance are repositioned for better load distribution, and a main girder is replaced to ensure safety.

Verification: After making these changes, we run the FEA simulations again. The results are promising; the stress levels in all elements are now within safe limits.

Manual Calculations: To double-check our findings, we perform manual calculations using the strength of materials principles. These calculations confirm our FEA results.
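To give a feel for what such a hand check looks like, here is a minimal sketch for a single element. It assumes a simply supported beam under a uniformly distributed load, for which the maximum bending moment is w·L²/8 and the bending stress is M/S. The load, span, section modulus, and allowable stress are hypothetical numbers chosen for illustration; they are not taken from the warehouse example.

```python
# Hand-check sketch for one element: a simply supported beam under a
# uniformly distributed load. All input values are hypothetical.

def max_bending_moment(w_n_per_m: float, span_m: float) -> float:
    """Maximum bending moment (N*m) at midspan: M = w * L^2 / 8."""
    return w_n_per_m * span_m ** 2 / 8.0


def bending_stress(moment_nm: float, section_modulus_m3: float) -> float:
    """Maximum bending stress (Pa): sigma = M / S."""
    return moment_nm / section_modulus_m3


w = 12_000.0       # N/m, assumed combined snow + dead load carried by the beam
span = 8.0         # m, assumed span
S = 1.2e-3         # m^3, assumed elastic section modulus of the beam
allowable = 165e6  # Pa, assumed allowable stress for the chosen steel

M = max_bending_moment(w, span)
sigma = bending_stress(M, S)
utilization = sigma / allowable  # below 1.0 means the element passes this check

print(f"M = {M:.0f} N*m, stress = {sigma / 1e6:.1f} MPa, utilization = {utilization:.2f}")
```

If the utilization came out above 1.0, that element would be a candidate for the kind of redesign described above.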

This is a basic example illustrating that structural analysis can be applied to structures like warehouses. Consider this a high-level overview of structural analysis; it’s certainly not as simple as running a few simulations and calling it a day.

💡 Introduction to Structural Analysis, if you are interested in learning more: https://eng.libretexts.org/Bookshelves/Civil_Engineering/Structural_Analysis_(Udoeyo)/01%3A_Chapters/1.01%3A_Introduction_to_Structural_Analysis

Structural Analysis in Complex Systems

A complex system is a system composed of many components or agents that may interact with each other, often in non-linear ways, leading to emergent behavior that is not easily predictable by analyzing individual components in isolation. These systems can adapt to their environment and evolve over time, and their behavior is often difficult to model due to the intricate interdependencies among their parts.

Although complex systems and structural engineering both involve the study and understanding of systems, they differ fundamentally in their nature, scope, and behavior. A complex system is typically characterized by a large number of interacting components, non-linear interactions, emergent behavior, and adaptability to changing conditions. These systems often exhibit behavior that is not easily predictable based solely on the properties of individual components. The interactions and feedback loops in complex systems can lead to self-organization, adaptation, and sometimes chaotic behavior.

In comparison, a civil engineering structure, such as a bridge, building, or dam, is a man-made construction designed for a specific purpose, like transportation or habitation. These structures are meticulously planned and built to withstand specific loads and environmental conditions. While their behavior, influenced by factors like materials, loads, and environmental conditions, is generally well-understood and predictable now, this hasn’t always been the case. Part of this evolution in understanding is a matter of time, experience, and learning.

For instance, the period from the late 19th to the early 20th century was marked by a trend of bridges collapsing. This era of trial and error highlights the learning curve that civil engineering has undergone. Two primary reasons contributed to these failures: firstly, working with the new bridge-building material of steel, whose properties were not completely understood, posed unforeseen challenges. Secondly, engineers and scientists were slow to recognize that the principle of resonance, well-known from acoustics, was a general phenomenon also applicable to mechanical systems, such as bridges.

💡 The Tacoma Narrows Bridge collapse occurred on November 7, 1940, in Washington State, USA. It was a suspension bridge that dramatically failed due to aeroelastic flutter caused by strong wind conditions. This phenomenon led to the bridge oscillating and eventually tearing apart.

💡 In the article "Towards a Practice of Token Engineering," Trent McConaghy explains the collapse of the bridge, stating, "How did the bridge collapse? The designers did anticipate for wind, after all. However, they failed to anticipate that the particular wind patterns would set up resonance with the bridge itself. When you have an appropriately timed force applied to a system in resonance, the amplitude of the resonance grows over time." The full article can be found at https://blog.oceanprotocol.com/towards-a-practice-of-token-engineering-b02feeeff7ca.

Nowadays, engineers employ principles from physics and mathematics to design structures that are safe and durable. This includes the foundational use of Euclidean geometry and the understanding that forces in structures are typically additive. These principles provide a mathematical framework for designing buildings, bridges, and other structures with precision and predictability. This became especially important with the advent of modern materials and construction techniques, which allowed for more complex and ambitious structures.

Unlike complex systems, where emergent behavior is a hallmark, modern civil engineering structures are designed to avoid unexpected behaviors. Emergent behaviors, like an unforeseen resonance in a bridge, are usually considered a design flaw, a lesson learned from historical experiences. Such flaws, prevalent in the past, underscore the importance of the well-established principles of geometry and physics in the design and construction of these structures, and how the field has evolved through experience and improved understanding.

💡 Civil engineering structures and complex systems differ significantly in their goals and the nature of those goals. The primary goals of civil engineering structures are rooted in functionality, safety, durability, and cost-effectiveness.

Complex systems, on the other hand, might not have a single, predefined goal. Instead, they often exhibit multiple, sometimes competing, objectives. For example, an ecosystem’s goals might include species survival, nutrient cycling, and resilience to external shocks. These goals can be interdependent and might change over time based on internal and external factors.

You might be wondering, “Is it worthwhile to delve into complex systems and deconstruct their components and interrelations?”

The answer is not straightforward, because such systems are complex and dynamic. Interventions can sometimes result in unintended side effects due to the nonlinear and interconnected characteristics of complex systems. While modeling and assumptions offer valuable insights, they often simplify reality. Relying too heavily on them without accounting for real-world nuances can lead to mistakes. Furthermore, complex systems often span multiple disciplines, necessitating collaboration across different fields. Even with all these tricky properties, the structural analysis of a complex system can yield positive results:

Deeper Understanding: It provides an advanced understanding of the system’s architecture and the relationships between its components, leading to insights that might not be apparent from a superficial examination.

Predictive Power: By understanding the structure and interactions within a system, one can predict its behavior under various conditions, even if those conditions have not been previously observed.

Identification of Critical Components: Structural analysis can identify components that are crucial for the system’s functioning, which can be targeted for intervention, protection, or optimization.

Optimization: With a clear understanding of the system’s structure, one can optimize its performance, resilience, or other desired outcomes.

Informed Interventions: By understanding the system’s structure, interventions (like changes, additions, or removals) can be made in a more informed and effective manner.

Risk Management: Structural analysis can help in identifying vulnerabilities or potential points of failure in a system, aiding in risk assessment and management.

Complexity of Token Economies

If you haven’t understood where I was heading until now, let me share a little secret: token economies are also complex systems! (Crazy, I know). Many parallels can be drawn between complex systems and token economies. To name just a few:

- Interactions Leading to Collective Behavior

- Feedback Loops

- Adaptability

- Network Effects

- Non-linearity

- Interdependencies

- Emergence

💡 Shermin Voshmgir and Michael Zargham, in their paper "Foundations of Cryptoeconomic Systems," discuss how token economies are complex systems. "Blockchain networks and similar cryptoeconomic networks are systems, specifically complex systems. They are adaptive networks with multiscale spatio-temporal dynamics. Individual actions may be incentivized towards a collective goal with 'purpose-driven' tokens. Blockchain networks, for example, are equipped with cryptoeconomic mechanisms that allow the decentralized network to simultaneously maintain a universal state layer, support peer-to-peer settlement, and incentivize collective action." The full paper is available at https://research.wu.ac.at/en/publications/foundations-of-cryptoeconomic-systems-6.

Structural Analysis in Token Economies

Let’s examine how structural analysis can be applied to token economies to identify weak links, vulnerabilities, and potential opportunities.

Non-Technical

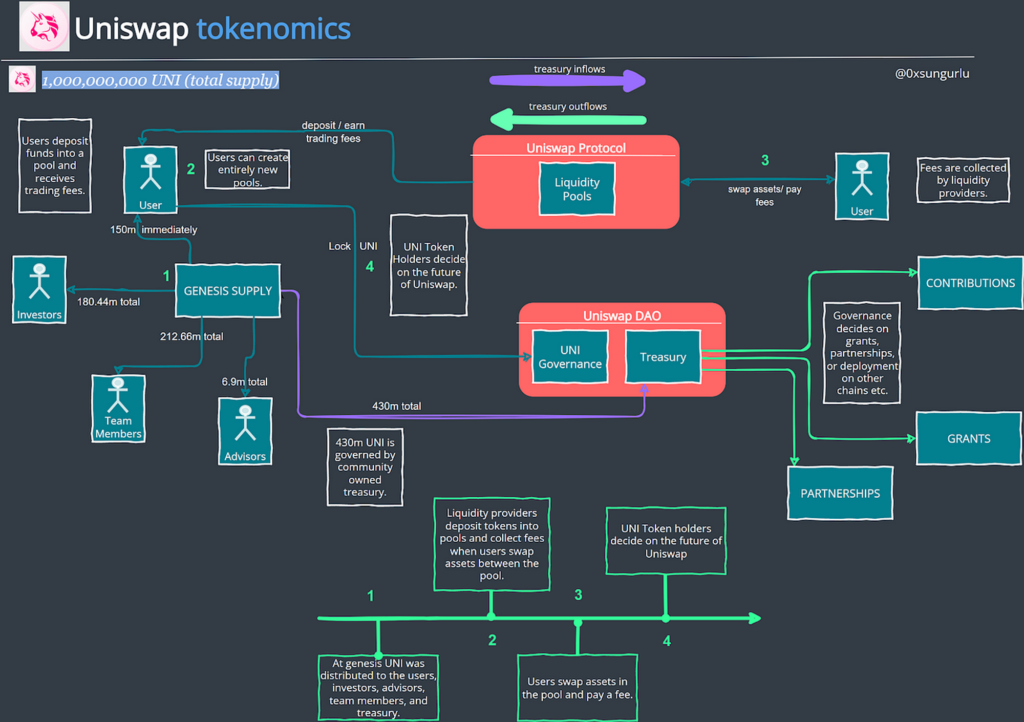

Mapping the System: The first step in structural analysis is to map out the system, representing components as nodes and their interactions as links. This creates a network representation of the system, making it easier to visualize and analyze.

💡 Original Source: https://tokenomicsdao.xyz/blog/tokenomics-101/tokenomics-101-uniswap-uni/

Identifying Central Elements: By analyzing the network, one can identify elements that have a high degree of connectivity or centrality. These elements often play crucial roles in the system’s functioning. If they fail or are compromised, the impact can ripple through the system. Conversely, strengthening or optimizing these nodes can lead to significant system-wide improvements.

We can use the example of Uniswap, whose token value flow is depicted above:

Liquidity Pools: At the heart of Uniswap are its liquidity pools. These pools contain reserves of two tokens and provide the primary mechanism for trading. Liquidity providers deposit tokens into these pools in exchange for a share of the trading fees.

Users/Traders: Individuals or entities that interact with the Uniswap platform to swap tokens. Their trading actions, influenced by market conditions and token prices, drive the dynamics of the liquidity pools.

Liquidity Providers: These are users who deposit tokens into liquidity pools. They earn trading fees in return but also take on the risk of impermanent loss. Their decisions to add or remove liquidity can impact the depth of the market and the stability of token prices.

Arbitrageurs: Individuals or bots that take advantage of price discrepancies between Uniswap and other exchanges. Their actions help keep prices on Uniswap in line with the broader market.

Governance Token (UNI): UNI is Uniswap’s governance token, allowing holders to vote on proposals related to the platform’s development, changes, and future direction.

Fees: A small fee is taken from each trade on Uniswap, which is then distributed to liquidity providers as a reward for supplying their tokens to the pools.

Smart Contracts: Uniswap operates through a series of Ethereum smart contracts that automate the trading process, manage liquidity pools, and ensure that all transactions are trustless and decentralized.

Price Oracle: Uniswap uses a mechanism to determine the relative price of tokens in its pools. This is crucial for executing trades and ensuring fair pricing. The price is determined by the ratio of the reserves of the two tokens in a pool.

External Factors: These include the broader Ethereum network’s state (e.g., gas prices, network congestion), competing DEXs and centralized exchanges, regulatory environment, and overall crypto market sentiment.
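The reserve-ratio pricing mentioned under Price Oracle can be made concrete with a small sketch. It assumes a constant-product pool (x · y = k) with a 0.3% fee, in the style of Uniswap v2; later versions with concentrated liquidity behave differently. The pool size and trade size are hypothetical.

```python
# Minimal sketch of a constant-product pool (x * y = k), in the style of
# Uniswap v2. Reserves and trade size are hypothetical.

def spot_price(reserve_x: float, reserve_y: float) -> float:
    """Price of one unit of X, quoted in Y, taken from the reserve ratio."""
    return reserve_y / reserve_x


def swap_x_for_y(reserve_x: float, reserve_y: float, amount_x_in: float,
                 fee: float = 0.003):
    """Sell amount_x_in of X to the pool.

    Returns (y_out, new_reserve_x, new_reserve_y). The fee is deducted from
    the input before pricing and stays in the pool, which is how liquidity
    providers earn it.
    """
    effective_in = amount_x_in * (1.0 - fee)
    y_out = reserve_y * effective_in / (reserve_x + effective_in)
    return y_out, reserve_x + amount_x_in, reserve_y - y_out


# Hypothetical pool: 100 ETH and 200,000 USDC.
eth, usdc = 100.0, 200_000.0
price_before = spot_price(eth, usdc)

usdc_out, eth_after, usdc_after = swap_x_for_y(eth, usdc, amount_x_in=10.0)
price_after = spot_price(eth_after, usdc_after)

print(f"price before: {price_before:.2f} USDC/ETH")
print(f"received:     {usdc_out:.2f} USDC for 10 ETH")
print(f"price after:  {price_after:.2f} USDC/ETH")
```

The trader receives less than ten times the starting price because the trade itself moves the reserve ratio. That gap between the pool price and the wider market is what arbitrageurs act on.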

At this point, even if you’ve overlooked some crucial elements, you can begin to delve deeper and pose important questions about system elements and their interactions. For instance, how would higher fees impact system agents such as traders, liquidity providers, arbitrageurs, and token holders? By identifying system elements, interactions, and posing questions, you can amass a wealth of information and identify further avenues to explore, such as searching for feedback loops and vulnerabilities.
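The mapping step can be sketched in a few lines of plain Python. The node names follow the Uniswap elements listed above; the links between them are our own simplified assumptions for illustration, not an official map of the protocol. Degree centrality here is simply the share of other nodes that a node is directly connected to.

```python
from collections import defaultdict

# Hypothetical, simplified links between the system elements.
edges = [
    ("Traders", "Liquidity Pools"),
    ("Liquidity Providers", "Liquidity Pools"),
    ("Arbitrageurs", "Liquidity Pools"),
    ("Liquidity Pools", "Fees"),
    ("Fees", "Liquidity Providers"),
    ("Smart Contracts", "Liquidity Pools"),
    ("Liquidity Pools", "Price Oracle"),
    ("UNI Governance", "Fees"),
    ("UNI Governance", "Smart Contracts"),
    ("External Factors", "Traders"),
    ("External Factors", "Arbitrageurs"),
]


def degree_centrality(edge_list):
    """Fraction of the other nodes each node is directly linked to."""
    neighbors = defaultdict(set)
    for a, b in edge_list:
        neighbors[a].add(b)
        neighbors[b].add(a)
    n = len(neighbors)
    return {node: len(adj) / (n - 1) for node, adj in neighbors.items()}


centrality = degree_centrality(edges)
most_central = max(centrality, key=centrality.get)

for node, score in sorted(centrality.items(), key=lambda kv: -kv[1]):
    print(f"{node:20s} {score:.2f}")
```

With these assumed links, the liquidity pools come out as the most connected element, which matches the intuition that they sit at the heart of the system. A dedicated graph library would offer richer measures, such as betweenness centrality, for larger maps.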

Analyzing Feedback Loops: Feedback loops are cycles where outputs from one part of the system influence inputs in another part, which in turn affects the original output. By identifying and studying these loops, one can understand the system’s self-regulating mechanisms and potential points of amplification or instability.

💡 Original Source: https://fs.blog/mental-model-feedback-loops/

There are two types of feedback loops: positive and negative. Positive feedback amplifies a change in the system’s output, resulting in accelerating growth or decline. Negative feedback dampens changes in output and stabilizes the system around an equilibrium point.
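A toy simulation makes the difference visible. In this sketch, each step changes the value in proportion to its current deviation from a target level; the update rule, the target of 100, and the gains are assumptions chosen purely for illustration. A positive gain pushes the value further away from the target (positive feedback), while a gain between -1 and 0 pulls it back (negative feedback).

```python
def simulate(x0: float, gain: float, target: float = 100.0, steps: int = 10):
    """Iterate x[t+1] = x[t] + gain * (x[t] - target) and return all values."""
    xs = [x0]
    for _ in range(steps):
        deviation = xs[-1] - target
        xs.append(xs[-1] + gain * deviation)
    return xs


growth = simulate(x0=101.0, gain=0.5)    # positive feedback, starts above target
decline = simulate(x0=99.0, gain=0.5)    # positive feedback, starts below target
stable = simulate(x0=120.0, gain=-0.5)   # negative feedback

print("positive (growth): ", [round(x, 1) for x in growth])
print("positive (decline):", [round(x, 1) for x in decline])
print("negative (stable): ", [round(x, 1) for x in stable])
```

The same positive loop produces runaway growth or runaway decline depending only on which side of the target it starts, which is why such loops deserve special attention in token systems.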

We can observe a well-known positive feedback loop in the token economy of the NFT marketplace, LooksRare.

Token incentives were introduced in the LooksRare marketplace to encourage specific behaviors. Users earned LOOKS (the marketplace token) rewards for trading NFTs and could lock up LOOKS to become eligible for 100% of the marketplace fees. The marketplace charged a 2% fee, which was distributed directly to stakers. Staking was also further incentivized with the native LOOKS token.

💡 @mattyTokenomics explores the LooksRare feedback loop in his tweet: "This behavior is generally not healthy for a platform. For example, on LooksRare as much as 90% of the activity is wash trading, with traders doing so to earn $LOOKS rewards that are worth more than the fees they pay. They then dump the $LOOKS to profit."

- Initial State: The marketplace introduces token incentives for trading NFTs and staking.

- Action: Users recognize that by staking a significant portion of LOOKS tokens, they can earn more incentives. This leads to the desire to maximize staking.

- Reaction: To maximize their rewards, some users resort to wash trading, where they trade NFTs between their own wallets. This artificially inflates trading volume and generates more fees. It is profitable because the token rewards are worth more than the fees paid to the marketplace.

- Outcome: The increased fees from wash trading are captured by the marketplace, of which 100% goes directly to the stakers. This means that the very users who are wash trading (and thereby increasing fees) stand to benefit the most, as they receive a portion of these fees.

- Reinforcement: On top of the fees, staking is further incentivized with the native token, LOOKS. This means that the more a user engages in wash trading, the more fees they generate, leading to more rewards both in terms of a share of the fees and additional LOOKS tokens.

- Repeat: The loop continues as users are further incentivized to engage in wash trading to maximize their rewards, leading to even more fees and, consequently, more rewards.

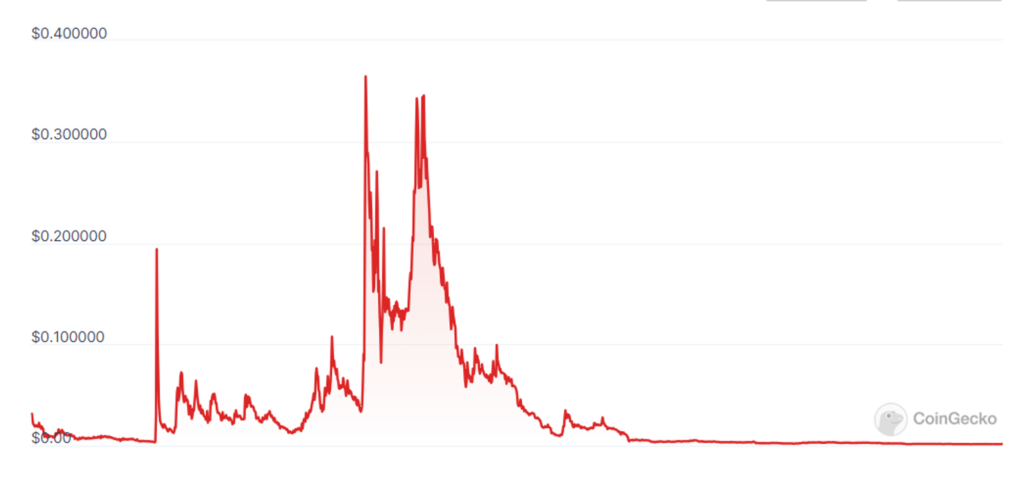
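The economics of this loop can be sketched with a simple profit calculation. Only the 2% fee comes from the description above. The reward rate per unit of volume, the LOOKS prices, and the trader's share of the staking pool are hypothetical, and the sketch assumes a fixed reward per unit of volume, which is a simplification.

```python
def wash_trade_profit(volume_eth: float, looks_price_eth: float,
                      fee_rate: float = 0.02,
                      looks_per_eth_volume: float = 50.0,
                      share_of_staking_pool: float = 0.0) -> float:
    """Profit in ETH from wash trading a given volume.

    fee_rate:              marketplace fee (2% in the example above)
    looks_per_eth_volume:  assumed LOOKS earned per 1 ETH traded
    share_of_staking_pool: assumed fraction of all staked LOOKS the trader holds
    """
    fees_paid = volume_eth * fee_rate
    reward_value = volume_eth * looks_per_eth_volume * looks_price_eth
    fees_returned = fees_paid * share_of_staking_pool  # fees flow back to stakers
    return reward_value + fees_returned - fees_paid


def break_even_price(fee_rate: float = 0.02,
                     looks_per_eth_volume: float = 50.0,
                     share_of_staking_pool: float = 0.0) -> float:
    """LOOKS price (in ETH) below which wash trading stops being profitable."""
    return fee_rate * (1.0 - share_of_staking_pool) / looks_per_eth_volume


high = wash_trade_profit(volume_eth=100.0, looks_price_eth=0.001)
low = wash_trade_profit(volume_eth=100.0, looks_price_eth=0.0002)
threshold = break_even_price()

print(f"profit at high LOOKS price: {high:+.2f} ETH")
print(f"profit at low LOOKS price:  {low:+.2f} ETH")
print(f"break-even LOOKS price:     {threshold:.4f} ETH")
```

Under these assumptions the loop sustains itself only while the token price stays above the break-even level. Once rewards are sold and the price falls below it, the incentive to generate volume disappears.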

The initial success of LooksRare’s token incentives was significant: the marketplace even surpassed OpenSea, a behemoth among NFT marketplaces, in trading volume, which highlighted the power of incentives. However, the activity these incentives produced (wash trading and speculative behavior) was neither sustainable nor genuine. This led to a drastic drop in token price when a large portion of the token supply entered the market from speculative hands.

💡 Original Source: https://defillama.com/protocol/looksrare?unlocks=true

For more information about recognizing and analyzing different types of feedback loops in token systems, we recommend Module 2 of the Token Engineering Fundamentals course.

Empirical Analysis: Empirical analysis utilizes data or scenarios from projects similar to the one under review to understand how the system might behave in real-world conditions. The benefit of this approach is that it can offer “soft proof” of whether a particular design might succeed or fail, based on its existence in other projects.

We can examine a well-known example of hyperinflation from Axie Infinity, one of the first play-to-earn (P2E) games in history.

Players in Axie Infinity could earn in-game rewards, called Smooth Love Potion (SLP), through various gameplay activities, making it one of the first games to financially incentivize its players. A key feature of the game is the ability to breed and enhance virtual pets called “axies” which can generate additional SLP.

Axie breeding and upgrading were designed as ‘sinks’ to remove SLP from circulation, helping maintain the token’s value by counterbalancing the supply. However, for these actions to remain attractive to players, they needed to yield more SLP in the future. This effectively transformed the intended ‘sinks’ into larger ‘faucets,’ increasing the SLP supply in circulation rather than reducing it.

As a result of this unintended consequence, the SLP token experienced excessive inflation, leading to a significant decline in its value. The increased supply of SLP outpaced the demand for the token, putting downward pressure on its price. This case highlights the importance of carefully balancing tokenomics to ensure a sustainable project economy.
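The sink-turned-faucet effect can be illustrated with a toy supply model. Every number here (starting population, daily earnings, breeding cost, breeding rate) is a hypothetical assumption, not actual Axie Infinity data. The only point is the mechanism: breeding burns SLP once, but each new axie then mints SLP every day.

```python
def simulate_slp_supply(days: int, breed_pct_per_day: int,
                        axies: int = 1000,
                        slp_per_axie_per_day: int = 50,
                        breed_cost_slp: int = 600) -> list:
    """Return the circulating SLP supply at the end of each day."""
    supply = 0
    history = []
    for _ in range(days):
        minted = axies * slp_per_axie_per_day          # faucet: gameplay rewards
        new_axies = axies * breed_pct_per_day // 100   # axies bred today
        burned = min(new_axies * breed_cost_slp, supply + minted)  # sink: breeding
        supply += minted - burned
        axies += new_axies                             # more axies earn tomorrow
        history.append(supply)
    return history


no_breeding = simulate_slp_supply(days=60, breed_pct_per_day=0)
with_breeding = simulate_slp_supply(days=60, breed_pct_per_day=2)

for day in (10, 30, 60):
    print(f"day {day:2d}: no breeding = {no_breeding[day - 1]:>9,}  "
          f"with breeding = {with_breeding[day - 1]:>9,}")
```

In the early days breeding does reduce supply, as intended. Later, the growing population mints more than breeding burns, and the supply overtakes the no-breeding scenario.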

Technical

Studying Emergent Behavior: By simulating the system’s behavior based on its structure and interactions, one can predict emergent behaviors. These behaviors, which arise from the collective interactions of the system’s components, can be opportunities or threats, depending on the system’s context and goals.

In the summer of 2022, Bancor suspended its DeFi Impermanent Loss (IL) insurance due to a hostile market environment.

💡 According to a Bancor blog post, "Due to hostile market conditions, Bancor’s Impermanent Loss Protection is temporarily disabled. IL protection is expected to be reactivated on the protocol as the market stabilizes. This is a temporary measure to protect the protocol and its users" (Bancor Network, 2022).

Impermanent loss occurs when a user provides liquidity to a pool, and the value ratio of their deposited assets changes over time, often leaving investors with a greater quantity of the devalued token. Bancor had been using its protocol-owned liquidity to fund impermanent loss protection, staking its native token, BNT, in pools, and utilizing the accumulated fees to reimburse users for any temporary loss.
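For a rough sense of scale, impermanent loss in a standard 50/50 constant-product pool depends only on how much the price ratio of the two assets has changed since the deposit. The sketch below uses the well-known formula IL = 2·√r / (1 + r) − 1, where r is the new price ratio divided by the price ratio at deposit. This is a general illustration, and we are assuming this pool type; it does not model Bancor's own pool design or its protection mechanism.

```python
import math


def impermanent_loss(price_ratio: float) -> float:
    """Loss of an LP position relative to simply holding, as a negative fraction.

    price_ratio: new relative price / relative price at deposit (r > 0).
    Assumes a 50/50 constant-product pool and ignores earned fees.
    """
    return 2.0 * math.sqrt(price_ratio) / (1.0 + price_ratio) - 1.0


for r in (1.0, 1.5, 2.0, 4.0, 0.25):
    print(f"price ratio {r:>4}: impermanent loss = {impermanent_loss(r):.2%}")
```

The loss is the same whether the price quadruples or falls to a quarter, and it grows with the size of the move. A protocol that promises to cover it is therefore most exposed exactly when markets are most volatile.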

The suspension of IL protection was necessitated by the sell-off of reward emissions accumulated over the previous 18 months. The rewards in BNT had a dual cost:

- They depreciated the BNT value, increasing the impermanent loss on the network.

- This IL was compensated with additional BNT emissions, which were in turn sold, resulting in further value depreciation.

This issue was compounded by the insolvency of significant centralized entities, who were primary beneficiaries of BNT liquidity mining rewards as long-time liquidity providers in Bancor. To settle their liabilities, these entities quickly sold off their BNT holdings and withdrew substantial liquidity from the system, exposing an economic vulnerability within the protocol. This triggered a cascading effect, as the declining price prompted further withdrawals and sales, driving the price down even more and causing considerable market disruption.

💡 Reference: Bancor Network. (2022, June 19). Market Conditions Update [Blog post]. Retrieved from https://blog.bancor.network/market-conditions-update-june-19-2022-e5b857b39336

Scenario Analysis: With a structural model in hand, one can simulate various scenarios to understand how the system responds to different conditions or interventions. This can help in identifying opportunities for optimization or potential threats under specific circumstances.

TerraUSD (UST) was one of the largest stablecoins until it lost its peg to the U.S. dollar in May 2022. It relied on a network of arbitrageurs to maintain this peg by buying and selling Terra’s volatile cryptocurrency, LUNA. To acquire UST, one first had to mint it, exchanging LUNA at the current rate. The protocol would then burn the received LUNA, reducing its supply and slightly increasing its price. Conversely, minting LUNA required converting UST stablecoins, which were then burned, resulting in a marginal increase in UST’s price.

As the price of LUNA fell, more and more LUNA had to be minted for each UST redeemed, because every 1 UST was swapped for $1 worth of LUNA. Eventually, due to this dilution, LUNA’s price dropped so low that there was insufficient liquidity to accommodate the inflow of UST.
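This dilution dynamic is straightforward to reproduce in a scenario simulation. The sketch below assumes that each round a fixed amount of UST is redeemed for $1 worth of newly minted LUNA, and that LUNA's total market capitalization stays constant, which is an optimistic simplification. The starting supply, price, and redemption size are hypothetical.

```python
def simulate_redemptions(luna_supply: float, luna_price: float,
                         ust_redeemed_per_round: float, rounds: int) -> list:
    """Return (luna_minted, luna_supply, luna_price) after each round."""
    market_cap = luna_supply * luna_price  # assumed constant (optimistic)
    history = []
    for _ in range(rounds):
        minted = ust_redeemed_per_round / luna_price  # $1 of LUNA per 1 UST
        luna_supply += minted
        luna_price = market_cap / luna_supply         # same value, more tokens
        history.append((minted, luna_supply, luna_price))
    return history


history = simulate_redemptions(
    luna_supply=350e6,           # hypothetical starting supply
    luna_price=80.0,             # hypothetical starting price in USD
    ust_redeemed_per_round=1e9,  # hypothetical redemptions per round
    rounds=20,
)

for i, (minted, supply, price) in enumerate(history, start=1):
    if i in (1, 5, 10, 20):
        print(f"round {i:2d}: minted {minted / 1e6:6.1f}M LUNA, "
              f"supply {supply / 1e6:6.1f}M, price ${price:5.2f}")
```

Even with the market capitalization held constant, each round mints more LUNA than the last. In reality the market capitalization was falling as well, which made the spiral far steeper.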

💡 "UST’s tokenomics were optimized for increasing the market cap of UST above everything else, offering a heavily advertised 20% APY unconditionally. Consequently, its tokenomics lacked any mechanism to cope with the devaluation of LUNA below a critical point. UST's model naively depended on each LUNA token maintaining a minimum value. As the supply of UST expanded, the required value per LUNA escalated, rendering the protocol increasingly fragile and volatile as its size increased." MattyTokenomics.[Modeling & Optimization]. Retrieved from https://tokenomics-guide.notion.site/2-6-Modeling-Optimization-bcaab2f1ee2c4cceb6371ce29d3e1827

💡 “Risks of insolvency increase when UST adoption outpaces the growth of the LUNA market price. Based on the average inflow of $121 million per day over the last month, the circulating supply of LUNA needs to increase by $0.33 per token every day to maintain backing. If it's less, the system edges towards a Minsky moment—a sudden market collapse.” @0xHamz on Twitter. (2022, March 22). [Thread on UST and LUNA's market dynamics]. https://web.archive.org/web/20220322205103/https://twitter.com/0xHamz/status/1506372692005593090

Game-Theoretic Analysis: Game-theoretic analysis involves creating mathematical models that represent the strategic interactions between agents within a system. These models include the preferences, possible strategies, and payoffs for each participant. By applying game theory, one can predict equilibrium states of the system, such as a Nash equilibrium, where no participant can benefit from changing their strategy unilaterally.

Olympus is a reserve currency protocol designed to establish OHM as a decentralized, censorship-resistant reserve currency within the Web3 ecosystem. OHM is a treasury-backed currency, fully collateralized by a basket of decentralized assets such as DAI, FRAX, ETH, BTC, and others.

Staking in Olympus was a means for OHM holders to earn rewards and functioned as the primary value accrual strategy for OlympusDAO. It entailed users locking their OHM tokens into the protocol in exchange for rewards. These rewards were distributed every eight hours, with the protocol issuing new OHM tokens into the staking pool. A rebase process subsequently adjusted the staked OHM balance in each user’s account, ensuring their sOHM holdings corresponded with the expanded staking pool.

The Olympus protocol applied a fundamental game theory model as a marketing strategy, which was effective for some time. Within this framework, consider a scenario with two Olympus users, each facing three choices: stake, bond, or sell OHM. Staking, considered the most beneficial action, is assigned a value of +3, bonding a moderate +1, and selling a detrimental -1, reflecting the impact of these actions on the ecosystem’s health.

When both users act, the sum of their actions’ values indicates the overall benefit to the ecosystem. The goal is to achieve the highest combined value for the best collective outcome.

Initially, the Olympus team promoted the idea that the most beneficial scenario, rated at (3,3) with a combined value of 6, would occur when both users stake their OHM. However, this game theory model was overly simplified and did not consider that long-term holders might eventually be more inclined to sell than new entrants. This oversight contributed to a significant price drop as the dynamics played out.
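The simplified model described above can be written out as a small payoff table. The action values (+3, +1, -1) are those given in this section. The second set of values, for a long-term holder who privately gains more from selling, is a hypothetical assumption that we add to show how the collectively best outcome and an individual's best response can diverge.

```python
from itertools import product

# Action values from the simplified (3,3) model described above.
ACTION_VALUE = {"stake": 3, "bond": 1, "sell": -1}


def payoff_table(values: dict) -> dict:
    """Map every pair of actions to the pair of values for the two users."""
    return {(a, b): (values[a], values[b]) for a, b in product(values, repeat=2)}


def best_collective_outcome(table: dict) -> tuple:
    """Action pair with the highest combined value."""
    return max(table, key=lambda pair: sum(table[pair]))


def best_response(private_values: dict) -> str:
    """Action an individual prefers, given their own private payoffs."""
    return max(private_values, key=private_values.get)


table = payoff_table(ACTION_VALUE)
best = best_collective_outcome(table)
print("best collective outcome:", best, "combined value:", sum(table[best]))

# Hypothetical: a long-term holder with large unrealized gains values
# selling more highly than the promoted model assumes.
long_term_holder = {"stake": 3, "bond": 1, "sell": 5}
print("new entrant prefers:     ", best_response(ACTION_VALUE))
print("long-term holder prefers:", best_response(long_term_holder))
```

As long as every participant shares the promoted payoffs, staking is everyone's best response and (3,3) holds. Once some participants' private payoffs differ, the model stops predicting their behavior.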

💡 "To sustain this APY, the protocol issues new OHM, effectively diluting the value of OHM in the process (akin to what happens with stock splits, where true value isn't created). Despite this, the allure of high APY drew many investors. Since the sole way to collect these rewards was through staking OHM within the protocol (with historically over 90% of OHM staked), a significant portion of the asset supply was locked up, bolstering the price and stimulating demand. This mechanism gave rise to Olympus' renowned game theory strategy (3,3). When faced with three actions—staking, bonding, or selling—between two investors, the most valuable collective strategy would be for both to stake OHM, resulting in a higher OHM price and increased holdings for everyone involved." Griffith, G. DeFi’s Disruptive Potential — Remembering Olympus DAO. Retrieved from https://medium.com/blockchain-biz/defis-disruptive-potential-remembering-olympus-dao-9b530bb8b0f9

Conclusion

Structural analysis stands as a highly beneficial tool in dissecting and understanding token economies, as it allows for an in-depth examination of the complex layers within digital economic structures. However, it is not without limitations. Structural analysis cannot fully capture all protocol risks, partly due to the inherent limits of human cognitive capacity. While it may identify elements of a complex system and their interactions, fully comprehending the extensive range of interrelations and edge-case scenarios remains a significant challenge.

Therefore, structural analysis should be regarded as an instrumental resource in substantially reducing the areas vulnerable to economic and technical attacks, but not as a cure-all for eliminating all potential threats. It acts as a vigilant guardian rather than an infallible one. With the ongoing enhancement of modeling and verification tools, we can expect structural analysis to become an even more powerful asset, extending beyond its present scope and offering deeper, more precise insights into the dynamics of token economies.

About the main author

Linas is a tokenomics researcher at Hacken, with a background in cryptocurrency and fintech consultancy. Having worked on over 20 projects, his expertise in economic design is showcased in his published work on tokenomics audit procedures. Linas melds his industry experience with structural analysis and a focus on risk assessment to assist crypto startups in building robust, sustainable economies.
