Tokenomics and AI

Artificial Intelligence (AI) has made substantial inroads across industries. Among recent AI advancements, Large Language Models (LLMs) have garnered significant attention and are now applied well beyond their initial linguistic tasks.

This article investigates AI’s influence on token model creation and engineering, covering discernible use cases and their associated limitations. In particular, we examine how LLMs can be leveraged to create self-balancing token economies.

Background Information (Introduction to token engineering)

Tokenization can be described as the encapsulation of value in tradeable units of account, called tokens or coins [1]. Its disruptive potential lies in the ability to expand the concept of value that can be distributed and traded beyond purely economic terms, including reputation, work, copyright, utility, and voting rights. Once tokenized, all these manifestations of value can be detected, accounted for, and leveraged in the context of a system of incentives that may promote fair levels of wealth and power redistribution [2].

In other words, tokenization represents a form of digitalization of value and, just as the Internet enabled the free and fast circulation of digitised information, the blockchain is allowing the “almost free” [3] and borderless flow of digitised value.

Token engineering, however, involves carefully crafting and refining the economic mechanisms and incentives within a token ecosystem, aiming to align the interests of participants, foster network effects, and drive desired behaviours [4].

The scope of token engineering goes beyond token creation, including:

- Adoption: covers the ease of access to, and overall user experience of, the token ecosystem to optimise engagement among members.

- Data Analysis and Modelling: the design of the token ecosystem is managed, assessed, and improved using data analytics and modelling techniques. Feedback is implemented based on iterative empirical insights.

- Ethical Considerations: the design and deployment of token ecosystems carry ethical and social implications that need to be appropriately addressed to maintain integrity, privacy, and sustainability.

- Governance: creating the frameworks for managing communities and decentralising decision-making within a tokenized system.

- Incentive structures: aligning chosen incentives to promote relevant behaviours and actions within the ecosystem.

- Security and Auditing: to safeguard the interests and integrity of the token ecosystem, mitigating risks associated with hacking, fraud, or misuse.

- Smart Contract Deployment: to automate the framework of a token ecosystem.

Effective token ecosystem design is vital for decentralised platforms and applications. Token design, distribution, and usage profoundly impact stakeholders, including users, developers, investors, and the community. Tokens incentivize active participation in these ecosystems.

Well-structured tokenomics align participants’ interests, encouraging resource contributions and fostering a vibrant community [5]. Tokens facilitate seamless value exchange, enabling economic transactions within the ecosystem. They also support community governance through voting, enhancing decentralisation.

Transparent token distribution and clear economics promote fairness, integrity, and trust, boosting adoption and ecosystem sustainability.

Brief overview of AI and its broader utility for Token Engineering

Figure 1: Artificial Intelligence

The AI sub-field called “Artificial General Intelligence” (AGI) is most relevant. AGI is about autonomous agents interacting in an environment. AGI can be modelled as a feedback control system.

‘The launch of ChatGPT marked a new era in the field of natural language processing and AI. We expect AI in practical token engineering applications very soon. Typically, these applications are developed as proprietary services and tools. In electrical engineering and circuit design, AI-powered CAD is a multi-billion dollar industry’ [6].

LLMs like GPT-4 enable human language interfaces and automation in complex token engineering software (e.g. Tokenlab), acting as AI co-pilots. To understand the uniqueness of AI DAOs compared to standard DAOs or AI, we start with a foundation in DAOs and AI.

A DAO is a self-executing computational process operating autonomously on decentralised infrastructure, with the ability to manipulate resources. Examples include Bitcoin and Ethereum networks, where DAOs can run on top of other DAOs, like The DAO on Ethereum. These “triggered” DAOs function without human intervention and run on decentralised infrastructure [7].

AI has several definitions: it encompasses fields aiming for narrow intelligence, those striving for general human-level intelligence (AGI), and the resulting technology from these areas. Progress in narrow AI often drives advancements in AGI. An AI DAO utilises AI technology or is AI technology running on decentralised processing infrastructure.

Methodology (Home in on the self-balancing token economies application)

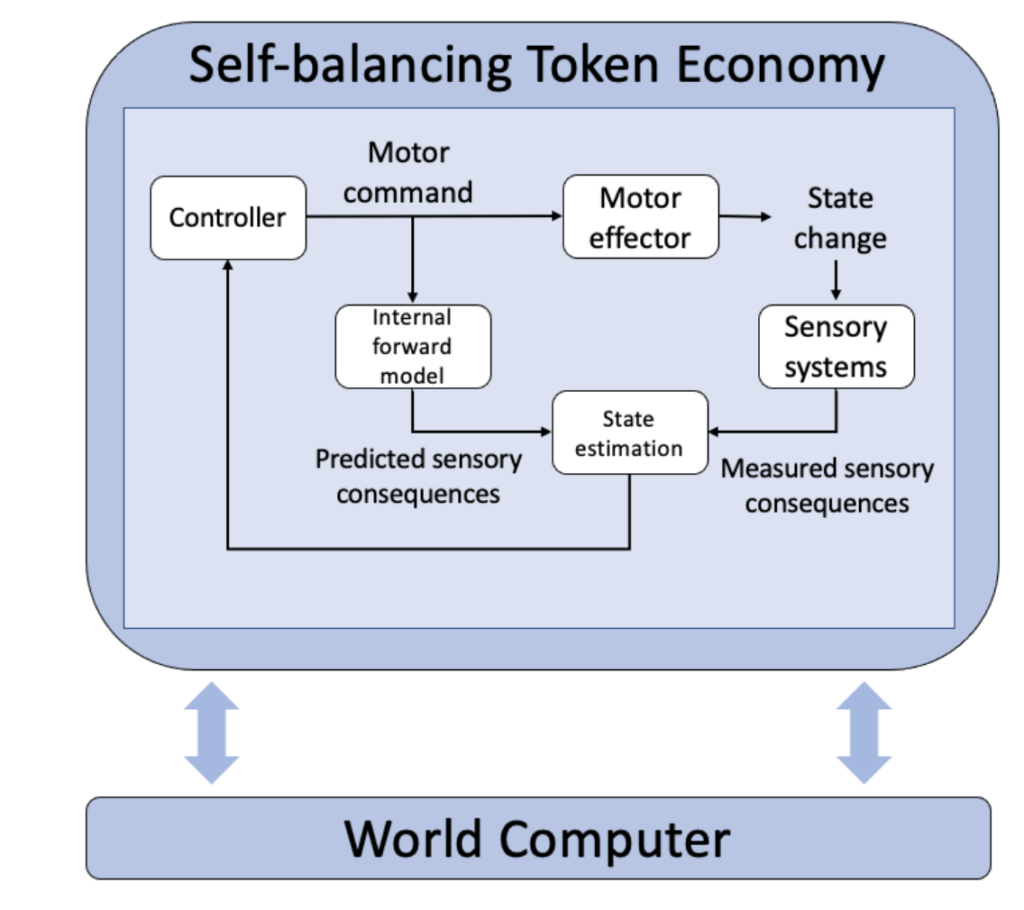

Figure 2: Basic model of a Self-balancing Token Economy

AI has the potential to produce “Self-balancing token economies”, by granting them the ability to:

Analyse data: Analysing data within token economies is a critical use case for AI in token engineering. AI models, powered by machine learning algorithms, are capable of processing vast volumes of data generated within these economies.

- Their primary objective is to identify patterns and sequences that could pose a risk to the stability of the token economy.

- By harnessing AI in this manner, token economies can benefit from an additional layer of security and proactive risk management.

- AI-powered data analysis helps maintain stability, trust, and integrity within these ecosystems, making them more resilient to external threats and internal challenges.
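As an illustration of the pattern detection described above, the following minimal sketch flags days whose transfer volume deviates sharply from the recent average. It uses a simple trailing z-score rather than a trained machine learning model, and the function name `flag_anomalies`, the window size, the threshold, and the sample volumes are all assumptions made for this example.

```python
from statistics import mean, stdev

def flag_anomalies(volumes, window=5, threshold=3.0):
    """Return the indices whose value deviates from the mean of the
    preceding `window` values by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(volumes)):
        history = volumes[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history gives no scale to compare against; skip it.
            continue
        z_score = (volumes[i] - mu) / sigma
        if abs(z_score) > threshold:
            flagged.append(i)
    return flagged

# Hypothetical daily transfer volumes; the spike at index 7 is made up.
daily_volumes = [100, 102, 98, 101, 99, 103, 100, 450, 101, 97]
print(flag_anomalies(daily_volumes))
```

A production system would likely replace the z-score with a learned model and feed flagged events into a review process, but the shape of the loop (observe, score, flag) stays the same.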

Automated decision making: Tailored user insights driven by machine learning algorithms represent another powerful application of AI in token engineering.

- Overall, AI-driven automated decision-making and tailored user insights greatly enhance the efficiency, effectiveness, and user experience within token economies.

- By leveraging machine learning algorithms, these ecosystems can make data-driven decisions that optimise token supply, pricing mechanisms, and user engagement, ultimately contributing to their long-term sustainability and success [9].

Community maintenance: LLMs can play a significant role in enhancing community engagement and communication by analysing sentiment, generating content, and automating responses to user queries.

- Their ability to understand and generate human-like text enhances community engagement and communication, ultimately leading to a more vibrant and informed user community.

Ethics: Ethics plays a crucial role in the design and operation of token economies. LLMs can serve as valuable tools for addressing ethical considerations by detecting and mitigating biases, providing fairness recommendations, and promoting transparency.

- However, their role should be complemented by expert oversight and a commitment to ethical design principles from the outset to ensure the veracity of their recommendations and ethical alignment with the ecosystem’s goals.

Governance: Smart contracts paired with automated decision making would enable decentralised governance systems where machine learning algorithms participate in voting, evaluating, and decision making, allowing for efficient, transparent, automated governance.

- Although human input would be required at the outset, AI would automate governance, mitigating the potential risks of human interference in fair decision making.

Market predictions and optimisation: Machine learning algorithms excel at analysing extensive market data, including historical price movements, trading volumes, and user behaviours.

- By processing this data, these algorithms can identify patterns, trends, and potential market shifts.

- Predictions could be utilised to optimise token allocation, liquidity provision, and risk management strategies within the token economy.

- This serves as the foundation of what we call “Self-balancing token economies”, where predictions are used to regulate and automate decision making within the token ecosystem.

- Once initiated, these self-balancing token economies will be able to efficiently scale while remaining in alignment with collective interests.
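The regulating behaviour described above can be pictured as a feedback loop. The sketch below is one possible reading, not the mechanism of any particular protocol: a proportional controller nudges circulating supply whenever the observed price drifts from a target. The toy price model (price equals demand divided by supply), the gain, the cap on per-step changes, and every name in the code are assumptions chosen for illustration.

```python
def rebalance_step(supply, price, target_price, gain=0.5, max_change=0.10):
    """Return the supply after one proportional adjustment.

    A price above target expands supply; a price below target contracts it.
    The per-step change is capped at +/- max_change to avoid abrupt moves.
    """
    error = (price - target_price) / target_price
    change = max(-max_change, min(max_change, gain * error))
    return supply * (1 + change)

def simulate(demand, supply, target_price, steps):
    """Run the loop and return the price observed at each step."""
    prices = []
    for _ in range(steps):
        price = demand / supply  # toy price model (assumption)
        prices.append(price)
        supply = rebalance_step(supply, price, target_price)
    return prices

# Hypothetical starting point: the token trades 50% above its target price.
prices = simulate(demand=1_500_000, supply=1_000_000, target_price=1.0, steps=30)
print(round(prices[0], 3), round(prices[-1], 3))
```

In a real system the price would come from market data or a prediction model rather than a formula, and the adjustment would be executed by a smart contract subject to governance limits.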

Risk Management: Self-balancing ecosystems continuously analyse transaction patterns, user behaviours, and network activity within the token economy. This data provides valuable insights into the ecosystem’s health and potential vulnerabilities.

- These insights allow the system to detect and mitigate risks, including Sybil attacks, to safeguard the integrity of the ecosystem.

- The system can thus provide real-time threat detection and dynamic responses, enhancing the trust and security of the token economy while promoting community involvement in risk management decisions.
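To make the Sybil example concrete, here is a minimal sketch of one common heuristic: grouping accounts that were first funded by the same address and surfacing unusually large groups for review. It is only a heuristic, since a shared funder can also be an exchange or a faucet, and the function name, the threshold, and the sample addresses are hypothetical.

```python
from collections import defaultdict

def suspected_sybil_clusters(funding_edges, min_cluster_size=3):
    """Group accounts by the address that funded them and return the
    groups with at least `min_cluster_size` members."""
    accounts_by_funder = defaultdict(set)
    for funder, account in funding_edges:
        accounts_by_funder[funder].add(account)
    return {
        funder: sorted(accounts)
        for funder, accounts in accounts_by_funder.items()
        if len(accounts) >= min_cluster_size
    }

# Hypothetical (funder, funded account) pairs taken from transfer history.
edges = [
    ("0xA", "0x1"), ("0xA", "0x2"), ("0xA", "0x3"),
    ("0xB", "0x4"),
    ("0xC", "0x5"), ("0xC", "0x6"),
]
print(suspected_sybil_clusters(edges))
```

Flagged clusters are candidates for human or community review rather than automatic penalties, which keeps the risk management decisions described above in the hands of participants.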

Simulations: Combining the APIs of LLMs (e.g. ChatGPT API) with independent tokenomics libraries opens up new possibilities for democratising token simulations.

- It makes these simulations accessible to a wide range of users, from non-coders to skilled professionals, and encourages learning and experimentation in the field of token economics.

- This approach has the potential to drive innovation and expand the understanding of token ecosystems across various domains.
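A rough sketch of how such a pairing might look: an LLM translates a plain-language request into structured parameters, and ordinary code runs the simulation. No real LLM API is called here. The string `llm_response` is a stand-in for model output, and the parameter names and the simple emission-and-burn model are assumptions made for this example rather than the interface of any existing tokenomics library.

```python
import json

REQUIRED = ("initial_supply", "monthly_emission", "monthly_burn_rate", "months")

def parse_parameters(text):
    """Parse JSON parameters and keep only the fields the simulation expects."""
    params = json.loads(text)
    missing = [name for name in REQUIRED if name not in params]
    if missing:
        raise ValueError(f"missing parameters: {missing}")
    return {name: params[name] for name in REQUIRED}

def simulate_supply(initial_supply, monthly_emission, monthly_burn_rate, months):
    """Return the circulating supply at the end of each month."""
    supply = initial_supply
    trajectory = []
    for _ in range(months):
        supply += monthly_emission            # new tokens issued this month
        supply -= supply * monthly_burn_rate  # fraction of supply burned
        trajectory.append(supply)
    return trajectory

# Stand-in for text an LLM might return when asked to turn a request such as
# "start at one million tokens, emit 50k a month, burn 1%, for a year" into JSON.
llm_response = (
    '{"initial_supply": 1000000, "monthly_emission": 50000, '
    '"monthly_burn_rate": 0.01, "months": 12}'
)

trajectory = simulate_supply(**parse_parameters(llm_response))
print(round(trajectory[-1]))
```

Validating the model output before running the simulation matters because an LLM may omit or rename fields; rejecting incomplete input is safer than silently guessing defaults.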

Tokenomics Design: LLMs are adept at processing large volumes of data and conducting scenario modelling. They can analyse historical data, market trends, and user behaviours to gain insights into token economics parameters.

- This data-driven approach helps in designing token economies that align with the interests of their members.

- Taking this a step further, the integration of LLMs in tokenomics design facilitates data-driven decision-making, optimization of economic parameters, and the creation of simulations for bespoke use cases.

- For instance, utilising natural language, it is possible to train an AI using data from specialised online sources (e.g. CoinGecko) to create simulations for bespoke use cases.

- This allows for unique use cases where one or multiple self-balancing token economies could be coordinated to fulfil distinct roles in unison, granting access to more complex and creative synergetic solutions with the potential to drive the evolution of token economies in exciting and impactful ways.

Implementation/Adoption

Collectively, the application of AI in token engineering translates to self-owned, self-accumulating AIs [8].

Having explored the numerous benefits of AI-powered token engineering, it is worth noting that this combination has its limits, namely:

Biases and Ethical Considerations: LLMs rely on vast training datasets that may inadvertently contain biases present in the text data from which they were trained.

- These biases can manifest in the recommendations and outputs generated by LLMs, potentially leading to unfair or discriminatory outcomes within the token economy.

- Addressing these biases is critical for ensuring fairness and inclusivity. It requires a combination of proactive design measures, ongoing monitoring, community involvement, and ethical oversight to mitigate biases and uphold ethical standards within the ecosystem.

Lack of Real-World and Economic Context: LLMs may struggle to understand the intricacies of real-world economic systems, as they rely solely on patterns learned from training data, which is often derived from text sources rather than real-world economic experience.

- To address this limitation, a hybrid approach involving LLMs and human experts is required.

- Human experts can provide real-world context, interpret LLM-generated insights, and make informed decisions based on their understanding of economic dynamics, while LLMs can benefit from continuous learning and adaptation to real-world economic conditions.

- This involves updating training data and models to reflect changing economic landscapes to ensure accuracy and relevance within self-balancing token economies.

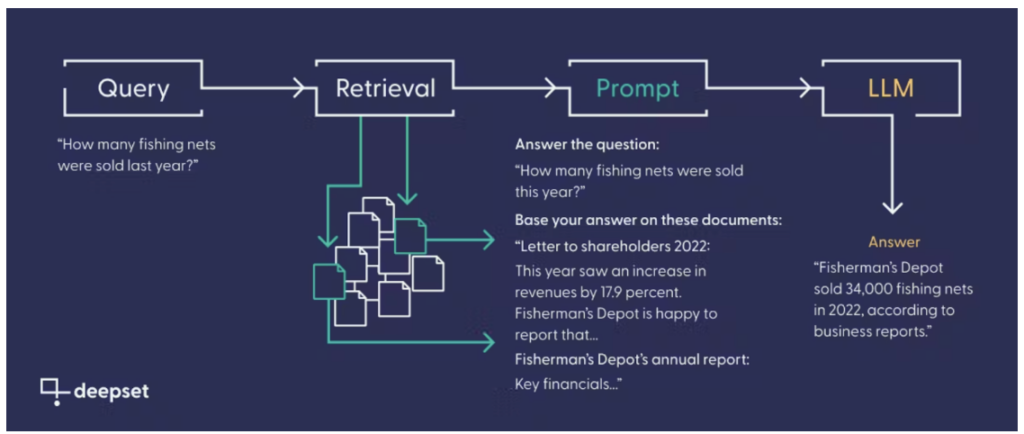

- For example, with the implementation of Retrieval Augmented Generation (RAG), LLMs can establish a foundation rooted in precise, real-time information, augmenting their internal analyses with valuable human insights [9]. This integration ensures the reliability of economic assertions, fostering a climate of trust and credibility within the system.

Figure 3: Sample model for Retrieval Augmented Generation [10]
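The retrieval step of RAG can be sketched in a few lines. The example below ranks a small set of documents by word overlap with the question and places the best matches into the prompt. Word overlap is a deliberately simple stand-in for the embedding-based similarity search that RAG systems typically use, and the documents, function names, and prompt wording are all invented for illustration.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=2):
    """Return the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(query, documents):
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

# Hypothetical knowledge base entries for a token economy (made-up facts).
documents = [
    "Circulating supply of the token is 1.2 million as of this week.",
    "The governance forum opened a proposal on staking rewards.",
    "Staking rewards are currently set at 5 percent per year.",
]
print(build_prompt("What are the current staking rewards?", documents))
```

The resulting prompt would then be sent to an LLM. Because the answer is constrained to retrieved, up-to-date context, its claims can be traced back to a source.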

Uncertainty and Speculation: Dealing with uncertainty and speculation is a notable challenge when integrating LLMs into self-balancing token economies.

- As LLMs generate insights and predictions based on historical data and patterns, they may provide speculative or probabilistic outputs rather than definitive economic forecasts.

- Relying solely on LLM-generated predictions without considering the inherent uncertainty can lead to the dissemination of potentially misleading information. Users and stakeholders within the token economy may make decisions based on these predictions, which could have unintended consequences.

- Authenticating and verifying LLM-generated insights through empirical data, expert analysis, and probabilistic outputs is essential to ensure informed decision-making and reduce the potential risks associated with speculative predictions.
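One way to surface this uncertainty rather than hide it is to report a range instead of a single number. The following sketch runs a small Monte Carlo simulation of a token price and returns a 5th to 95th percentile interval. The random-walk price model, the drift and volatility values, and the function names are assumptions chosen for illustration; they are not a forecast method proposed by the sources cited here.

```python
import random
from statistics import quantiles

def simulate_final_prices(start_price, daily_drift, daily_vol, days, n_paths, seed=0):
    """Simulate n_paths random-walk price paths and return their final prices."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    finals = []
    for _ in range(n_paths):
        price = start_price
        for _ in range(days):
            price *= 1 + rng.gauss(daily_drift, daily_vol)
            price = max(price, 0.0)  # a price cannot go negative
        finals.append(price)
    return finals

def prediction_interval(samples, lower_pct=5, upper_pct=95):
    """Return the (lower_pct, upper_pct) percentiles of the samples."""
    cuts = quantiles(samples, n=100)  # 99 cut points: cuts[k - 1] is percentile k
    return cuts[lower_pct - 1], cuts[upper_pct - 1]

# Hypothetical inputs: no drift, 2% daily volatility, 30-day horizon.
finals = simulate_final_prices(1.0, 0.0, 0.02, days=30, n_paths=500)
low, high = prediction_interval(finals)
print(f"90% of simulated outcomes fall between {low:.2f} and {high:.2f}")
```

Presenting the interval alongside any point estimate helps users and stakeholders see how wide the plausible outcomes are before acting on a prediction.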

Over-Reliance on Technical Solutions: LLMs simply cannot, and should not, entirely replace human input in token engineering.

- Human expertise, with its capacity for critical thinking, creativity, and ethical consideration, remains indispensable in token engineering to ensure a balanced and holistic approach.

Conclusion

In summary, this paper highlights viable use cases for AI-powered token engineering, and its implementation in self-balancing token economies. While we acknowledge the significant potential AI holds, the implementation of self-balancing token economies will require careful consideration of ethics, transparency, and governance to avoid encoding human bias into automated decision making.

We foresee this requiring formal mechanisms for ongoing monitoring, auditing, and community involvement to ensure the fairness and long-term sustainability of self-balancing token economies.

As both Large Language Models (LLMs) and tokenomics continue to advance, we can envision a future where the possibilities for token engineering and other transformative innovations are boundless. The responsible and thoughtful integration of AI into these ecosystems promises to drive positive change, ushering in a new era of decentralised, self-balancing economies that serve the interests of a diverse and global community.

Acknowledgements

We express our heartfelt gratitude to the contributors who played a vital role in this research paper on AI and Tokenomics.

Oritsebawo Ikpobe, an award-winning author in blockchain, drove this research with insights and industry expertise.

Dr. Stylianos Kampakis, a distinguished researcher, expanded our knowledge and commitment to excellence by providing invaluable guidance and expertise.

This paper is a testament to their collaborative efforts at the intersection of AI and Tokenomics.

References

[1] D. Easley, M. O’Hara, and S. Basu, “From mining to markets: The evolution of Bitcoin transaction fees,” Journal of Financial Economics, vol. 134, no. 1, pp. 91–109, 2019. doi:10.1016/j.jfineco.2019.03.004.

[2] P. Freni, E. Ferro, and R. Moncada, “Tokenomics and blockchain tokens: A design-oriented morphological framework,” Blockchain: Research and Applications, vol. 3, no. 1, p. 100069, 2022. doi:10.1016/j.bcra.2022.100069.

[3] P. Freni, E. Ferro and R. Moncada, “Tokenization and Blockchain Tokens Classification: a morphological framework,” 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 2020, pp. 1-6, doi: 10.1109/ISCC50000.2020.9219709.

[4] U. Sharomi, “Token Engineering: The application of large language models,” Medium, https://medium.com/coinmonks/token-engineering-the-application-of-large-language-models-149cb56f28c [Accessed: Aug. 26, 2023].

[5] O. Letychevskyi, “Creation of a self-sustaining token economy,” The Journal of The British Blockchain Association, vol. 5, no. 1, pp. 1–7, 2022. doi:10.31585/jbba-5-1-(4)2022.

[6] Gideonro et al., “Joint Tea-Tec Plan for AI-powered Token Engineering and $TEC utility,” Token Engineering Commons, https://forum.tecommons.org/t/joint-tea-tec-plan-for-ai-powered-token-engineering-and-tec-utility/1231 [Accessed: Aug. 26, 2023].

[7] T. McConaghy, “AI DAOs, and three paths to get there,” Medium, https://medium.com/@trentmc0/ai-daos-and-three-paths-to-get-there-cfa0a4cc37b8 [Accessed: Jul. 6, 2023].

[8] T. McConaghy, “Blockchains for Artificial Intelligence,” Medium, https://blog.oceanprotocol.com/blockchains-for-artificial-intelligence-ec63b0284984 [Accessed: Jul. 6, 2023].

[9] K. Martineau, “What is retrieval-augmented generation?,” IBM Research Blog, https://research.ibm.com/blog/retrieval-augmented-generation-RAG [Accessed: Oct. 11, 2023].

[10] “Always retrieval augment your LLM,” deepset Blog, https://www.deepset.ai/blog/llms-retrieval-augmentation [Accessed: Oct. 12, 2023].